Architectural Considerations for Self-replicating Manufacturing Systems

J. Storrs Hall*

Institute for Molecular Manufacturing

Palo Alto, CA 94301 USA

This is an abstract for a presentation given at the Sixth Foresight Conference on Molecular Nanotechnology.

The full article is available at http://www.foresight.org/Conferences/MNT6/Papers/Hall/index.html.

Molecular manufacturing technology promises very high levels of productivity, and achieving them makes techniques for self-reproduction in mechanical systems a major requirement. Although the basic ideas and high-level descriptions were introduced by von Neumann in the 1940s, no detailed design in a mechanically realistic regime ("the kinematic model") has yet been produced. In this paper I formulate a body of systems analysis to that end and, as a result, propose a higher-level architecture somewhat different from those seen heretofore.

The Necessity of Self-replication in Molecular Manufacturing

Without the productivity provided by self-replication, molecular manufacturing, specifically robotic mechanosynthesis, would be limited to microscopic amounts of direct product. Self-replicating systems thus hold the key to molecular engineering for structural materials, smart materials, the application of molecular manufacturing to power systems, user interfaces, general manufacturing and recycling, and so forth.

The cellular machinery of life seems to be an existence proof for the concept of self-replication. Indeed, many proposed paths to nanotechnology involve using it directly. However, compared with mechanosynthesis, it is limited in the types and specificity of reactions it can perform, and thus in the speed, energy density, and material properties of its outputs.

Alternatively, one could draw on the existing productive machinery of the industrial base, augmented with robotics, to close a cycle in which factories build parts, other factories build those parts into robots, and the robots build factories and mine raw materials. This is the approach of the 1980 NASA Summer Study (Freitas 1980). This is clearly a useful avenue of inquiry, but the proposed system is very complex and is based on bulk manufacturing techniques.

It seems likely that there are simpler and more direct architectures for self-reproducing machines, ones dealing in eutactic mechanosynthesis, rigid diamondoid structures, stored-program computers, and so forth. There is, however, a remarkable lack of even one detailed design in what von Neumann called the "kinematic model" (see Friedman (1996) vis-à-vis Burks (1966)). (There is no lack of designs in various cellular automata and other "artificial life" schemes, but those have all abstracted so far away from the problems of real-world construction that they are not directly relevant to the design of actual manufacturing systems.)

Self-replicating Systems: Analysis

Consider a system composed of a population of replicating machines. Each machine consists of a controller and one or more "operating units" capable of doing primitive assembly operations (e.g., mechanochemical deposition reactions). Let us define the following:

p(t) -- population at time t

g -- generation time (seconds to reproduce)

a -- "alacrity": primitive assembly operations per second, per operating unit

s -- size: number of primitive operations needed to construct one replicator

n -- number of primitive operating units

Then $g = \frac{s}{na}$ and, starting from a single replicator, $p(t) = 2^{t/g}$.
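As a minimal sketch, the two relations of the model can be written directly in Python. It assumes a single starting replicator, discrete doubling every generation, and all operating units kept busy; the function names are illustrative, and the example values (s = 1×10⁷ operations, one unit at 100 operations per second) anticipate the simple system described later.

```python
def generation_time(s: float, n: int, a: float) -> float:
    """g = s / (n * a): s primitive operations shared by n units at a ops/s each."""
    return s / (n * a)


def population(t: float, g: float) -> float:
    """p(t) = 2**(t/g), starting from one replicator that doubles every g seconds."""
    return 2.0 ** (t / g)


# Illustrative values: 1e7 operations, one operating unit at 100 operations per second.
g = generation_time(1e7, 1, 100.0)
print(g)                      # 100000.0 seconds
print(population(10 * g, g))  # 1024.0 after ten generations
```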

There are some interesting implications of even so simple a model. First, if a replicator based on a single universal constructor is redesigned to have two cooperating constructors, so that it builds twice as fast but is also twice as complex, the growth rate is unchanged: $n$ and $s$ both double, so $g = s/(na)$ stays the same. On the other hand, suppose the architecture is enhanced by adding constructors that are special-purpose and thus both simpler and faster. As long as all units can be kept busy, $na$ increases faster than $s$, decreasing $g$. Therefore raw parallelism is of no use in a replicator, but specialization (the assembly line) can be a viable optimization technique.
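A quick numerical check of both cases, using $g = s/(na)$ under the model's assumption that every unit stays busy. The specialized-unit figures (three special-purpose units, each twice as fast as the general constructor, with the whole replicator only 1.5 times as large) are hypothetical illustrative values, not numbers from the paper.

```python
def generation_time(s: float, n: int, a: float) -> float:
    """g = s / (n * a), assuming every operating unit is kept busy."""
    return s / (n * a)

# Baseline: one general-purpose constructor.
base = generation_time(s=1e7, n=1, a=100.0)

# Raw parallelism: two identical constructors, but the replicator is twice as large.
parallel = generation_time(s=2e7, n=2, a=100.0)

# Specialization (hypothetical figures): three simpler special-purpose units, each
# twice as fast as the general constructor, while the replicator grows only by half.
specialized = generation_time(s=1.5e7, n=3, a=200.0)

print(base, parallel, specialized)  # 100000.0 100000.0 25000.0
```

Doubling both $n$ and $s$ cancels exactly, while any change that raises $na$ by a larger factor than $s$ shortens the generation time.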

Assuming that the replicators can be diverted at any point to perform at full efficiency on some task of size $S_T$ (in primitive operations), the total time to finish the task can be given as a function of the number of generations $k$ allowed to complete before the diversion:

$$T_T = kg + \frac{S_T}{2^k\,na}$$

Or, using $na = s/g$ and factoring out $g$ to obtain the time in generations,

$$\frac{T_T}{g} = k + \frac{S_T}{s\,2^k}$$

This has a minimum where the derivative with respect to $k$ vanishes, $1 - \frac{S_T \ln 2}{s\,2^k} = 0$, that is, at

$$k = \log_2 \frac{S_T \ln 2}{s}, \qquad s\,2^k = S_T \ln 2 \approx 0.69\,S_T$$

In other words, divert to direct construction when the total size of all replicators ($s\,2^k$) reaches 69% of the size of the task to be done. (In practice, since the number of generations must be an integer, evaluate $T_T$ at the floor and ceiling of the indicated continuous value.)
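The optimum can be checked numerically. This sketch evaluates the time in generations, $T_T/g = k + S_T/(s\,2^k)$ (which follows from $g = s/(na)$), at the floor and ceiling of the continuous optimum $\log_2(S_T \ln 2 / s)$. The sample sizes (a 2×10¹² operation task built by 1×10⁷ operation replicators) are the complex and simple systems described in the next section; the function names are illustrative.

```python
import math

def time_in_generations(k: int, task: float, s: float) -> float:
    """T_T / g: grow for k generations, then let 2**k replicators share the task."""
    return k + task / (s * 2 ** k)

def best_generations(task: float, s: float) -> int:
    """Integer k minimizing T_T / g, checked at floor and ceiling of the continuous optimum."""
    k_cont = math.log2(task * math.log(2) / s)
    candidates = {max(0, math.floor(k_cont)), max(0, math.ceil(k_cont))}
    return min(candidates, key=lambda k: time_in_generations(k, task, s))

k = best_generations(task=2e12, s=1e7)
print(k)                                            # 17
print(round(time_in_generations(k, 2e12, 1e7), 2))  # 18.53
# Fraction of the task size reached by the replicators at diversion (about 0.66,
# near ln 2 because k must be an integer).
print(1e7 * 2 ** k / 2e12)
```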

Architectural Variations

Let us consider tradeoffs between architectures at various levels of complexity. To highlight the phenomena of interest, I will describe systems at the extremes of a spectrum. Suppose we have plans for two possible replicating systems, one simple and one complex. The simple one consists of a robot arm and a controller that is just a receiver for broadcast instructions (as described by Merkle (1996)). The complex one consists of ~100 molecular mills that are assembly lines for gears, pulleys, shafts, bearings, motors, struts, pipes, self-fastening joints, and so forth; its architecture also includes conveyors and general-purpose manipulators for putting parts together.

Drexler's nanomanipulator arm (Drexler 1992, p. 400 et seq.) is 4×10⁶ atoms without base, gripper, working tip, or motors. With the addition of motive means, a controller, and some mechanism for acquiring tools for mechanosynthesis, it seems very unlikely that a simple system could be built with fewer than 1×10⁷ atoms. The arm will need a precision of approximately 1×10⁴ steps across its working envelope to build itself and its controller by direct in-place deposition reactions. Let us assume that it takes an average of 1×10⁴ steps per deposition, including the time to discard and refresh tools. Let us also assume that it can receive 1×10⁶ instructions per second and can take one step per instruction.

One could design a faster arm, e.g. with internal controllers, but it would be more complex, and we are delineating an extreme of the design space here. Similarly, one could probably get by with fewer steps across the envelope, but the simplest way to achieve positional stability is to gear the arm down until the step size is small compared to other sources of error.

The simple system must execute 1×10¹¹ steps to reproduce itself (1×10⁷ depositions at 1×10⁴ steps each) and can do so in 1×10⁵ seconds (about 28 hours). For it to reach the capability to produce macroscopic products, which we will arbitrarily define as the ability to do 1×10²⁰ deposition reactions per second, it must have a population of 1×10¹⁸ (each individual can do 1×10² depositions per second), which implies 60 generations, about 69 days.
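The arithmetic for the simple system can be reproduced in a few lines. This is a minimal sketch that only restates the assumptions above (1×10⁷ depositions, 1×10⁴ steps per deposition, 1×10⁶ steps per second, a target of 1×10²⁰ depositions per second) and rounds the generation count up to a whole number; the variable names are illustrative.

```python
import math

# Assumptions stated in the text for the simple (broadcast-controlled arm) system.
depositions_to_build = 1e7   # size of one system, in deposition reactions
steps_per_deposition = 1e4   # includes discarding and refreshing tools
steps_per_second = 1e6       # one step per received instruction

steps_to_reproduce = depositions_to_build * steps_per_deposition  # 1e11 steps
g = steps_to_reproduce / steps_per_second                         # 1e5 seconds
depositions_per_second = steps_per_second / steps_per_deposition  # 100 per individual

target_rate = 1e20                                       # "macroscopic" capability
population_needed = target_rate / depositions_per_second  # 1e18 individuals
generations = math.ceil(math.log2(population_needed))    # whole generations needed

print(g / 3600)                  # generation time in hours (about 27.8)
print(generations)               # 60
print(generations * g / 86400)   # total time in days (about 69.4)
```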

An offhand, intuitive comparison with existing industrial systems suggests that it should be possible to build special-purpose "molecular mill" mechanisms for the primitive operations that are one-tenth as complex as a robot arm (there are, of course, many such primitive operations in a given industrial machine). If we assume that stations in such a machine can be ten nanometers apart, with 1×10⁶ atoms per station, and that internal conveyor speeds can be 10 m/s, a mill capable of building a 1×10⁴-atom part would contain 1×10¹⁰ atoms (1×10⁶ parts) and would produce 1×10⁹ parts per second, or its own parts-count equivalent in a millisecond. (This is somewhat faster than the "exemplar system" of Drexler, but again we are seeking an extreme.)

Suppose such systems could be built from 100 kinds of parts (one mill per kind), and assume that we must double the complexity of the system to allow for external superstructure and fully pipelined parts assembly. Then the complex system as a whole consists of 2×10¹² atoms and could reproduce itself in 2 milliseconds. Since it already produces 1×10¹⁵ atoms per second, it needs only 17 generations, or 34 milliseconds, to reach macroscopic capability.
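A similar sketch for the molecular-mill system follows. It assumes, as the figures above imply, one 1×10⁶-atom station per deposited atom, one finished part per station pitch of conveyor travel, and one mill per kind of part; the variable names are illustrative.

```python
import math

# Assumptions from the text for the complex (molecular-mill) system.
station_atoms = 1e6     # atoms per station
station_pitch = 10e-9   # metres between stations
conveyor_speed = 10.0   # metres per second
part_atoms = 1e4        # atoms per part; implies one station per deposited atom
kinds_of_parts = 100    # one mill per kind of part

mill_atoms = part_atoms * station_atoms              # 1e10 atoms per mill
mill_parts = mill_atoms / part_atoms                 # 1e6 parts in one mill
parts_per_second = conveyor_speed / station_pitch    # about 1e9 parts/s per mill

system_atoms = 2 * kinds_of_parts * mill_atoms       # doubled for superstructure: 2e12
system_parts = system_atoms / part_atoms             # 2e8 parts
g = system_parts / (kinds_of_parts * parts_per_second)            # about 2e-3 s
atoms_per_second = kinds_of_parts * parts_per_second * part_atoms  # about 1e15
generations = math.ceil(math.log2(1e20 / atoms_per_second))        # 17

print(g, generations, generations * g)  # about 0.002 s, 17, about 0.034 s
```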

Iterated Design Bootstrapping

Given a single "hand built" simple system, we could:

- Build a complex system immediately, atom by atom. This would take 2×10¹⁰ seconds (over 600 years).

- Reproduce simple systems until, 69 days later, the population has macroscopic capability.

- Simply assume that building the first complex system is the goal, and employ the formula above; it gives an optimum of 17 generations. This yields a first, reproductive phase of 472 hours, producing 131072 assemblers, which build the complex system in a second phase of 42 hours, for a total of about 21 and a half days.
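The figures for the first and third options can be checked with a short script. It is a sketch under the text's assumptions: the simple system has g = 1×10⁵ s, does 100 depositions per second, and is 1×10⁷ depositions in size, while the complex system is 2×10¹² depositions; years are taken as 365.25 days.

```python
import math

# Figures for the simple and complex systems, as given in the text.
g = 1e5        # simple-system generation time, seconds
rate = 100.0   # depositions per second per simple system
s = 1e7        # simple-system size, depositions
task = 2e12    # complex-system size, depositions

# Option 1: a single simple system builds the complex system atom by atom.
direct_years = task / rate / (365.25 * 86400)

# Option 3: replicate for k generations, then all 2**k units build the complex system.
def time_in_generations(k: int) -> float:
    return k + task / (s * 2 ** k)

k_cont = math.log2(task * math.log(2) / s)
k = min(math.floor(k_cont), math.ceil(k_cont), key=time_in_generations)
assemblers = 2 ** k
phase1_hours = k * g / 3600
phase2_hours = task / (assemblers * rate) / 3600
total_days = (phase1_hours + phase2_hours) / 24

print(round(direct_years), k, assemblers, round(phase1_hours), round(phase2_hours), round(total_days, 1))
# 634 17 131072 472 42 21.4
```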

This has implications for an optimal overall approach to bootstrapping replicators. Suppose we have a series of designs, each some small factor (say 5.8) more complex, and some small factor (say 2) faster to replicate, than the previous one. Then we optimally run each design for 2 generations and build one unit of the next design (with $S_T/s = 5.8$, the optimum is $k = \log_2(5.8 \ln 2) \approx 2$). As long as we have a series of new designs whose size and speed improve at this rate, we can build the entire series in a fixed, bounded amount of time, no matter how many designs are in the series. (It is the sum of an arbitrarily long series of exponentially decreasing terms. Perhaps we should call such a scheme "Zeno's Factory".)
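The convergence argument can be sketched numerically. Assuming each design is 5.8 times larger and replicates 2 times faster than its predecessor (the text's example factors), each stage costs $k + 5.8/2^k$ generations of the current design, and because generation times halve from stage to stage, the total measured in generations of the first design is a geometric series. The function names are illustrative.

```python
import math

RATIO = 5.8     # each design is 5.8x larger than the design that builds it
SPEEDUP = 2.0   # and replicates 2x faster

def stage_time(k: int) -> float:
    """Generations of the current design: grow k generations, then build one successor."""
    return k + RATIO / 2 ** k

k_cont = math.log2(RATIO * math.log(2))
k = min(math.floor(k_cont), math.ceil(k_cont), key=stage_time)  # 2 generations

def total_time(n_designs: int) -> float:
    """Total time for n stages, in generations of the first design."""
    return sum(stage_time(k) / SPEEDUP ** i for i in range(n_designs))

for n in (1, 5, 10, 40):
    print(n, round(total_time(n), 3))
# The sums approach stage_time(k) * SPEEDUP / (SPEEDUP - 1) = 6.9 generations,
# however many designs are added.
```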

For example, with the appropriate series of designs starting from the simple system above, the asymptotic limit is roughly 200 hours, a little over a week: about 100 hours for design 1 to build design 2, followed by 50 hours for design 2 to build design 3, plus 25 hours for design 3 to build design 4, and so on. Note that this sequence reaches systems with a generation time similar to that of the complex system at about the 25th successive design. Attempts to push the sequence much further will founder on physical constraints, such as the ability to supply materials, energy, and instructions in a timely fashion. Well before then, we will run into the problem of not having the requisite designs. Since all the designs need to be ready essentially at once, construction time is, to all intents and purposes, limited by the time it takes to design all the replicators in the series.
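Applied to the simple system, the series can be summed directly. This sketch assumes the first design's generation time is 1×10⁵ s, that each stage lasts 2 + 5.8/4 = 3.45 generations of the design doing the building, and that generation times halve with each design; it also counts how many halvings take 1×10⁵ s down to the complex system's 2 ms.

```python
import math

g1 = 1e5                          # first (simple) design's generation time, seconds
stage_generations = 2 + 5.8 / 4   # 2 generations of growth, then build one successor

# Duration of each stage in hours; generation time halves with each new design.
hours = [stage_generations * g1 / 2 ** i / 3600 for i in range(40)]
print([round(h) for h in hours[:4]])  # [96, 48, 24, 12]
print(round(sum(hours)), round(sum(hours) / 24, 1))  # about 192 hours, 8.0 days

# Halvings of g needed to reach the complex system's 2 ms generation time.
print(math.log2(g1 / 2e-3))  # about 25.6
```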

Architectural Implications

This sequence has implications for the architecture of replicating systems. Since each replicator will build others of its own type for only a few generations and then build something else, a design which is optimized to allow the unitized assembler to build another exactly like itself is not optimal for the process as a whole. Similarly, a design which follows the logic of bacteria, floating autonomously in a nutrient solution, is likely not optimal.

A more efficient scheme, given the desiderata above, is to keep the entire population in a common eutactic environment, physically attached to one another and to a substrate. The major new concern for the architecture in this approach is logistics: distributing raw materials (and information) in a controlled way, and building the infrastructure that does so and incidentally forms a framework for the ultimate product. Given such an infrastructure, productive units need not be individually closed with respect to self-replication.

This relaxes the design constraints on any individual unit significantly, and in a beneficial way. Current practice in solid freeform fabrication (see, e.g., Jurrens 1993) suggests that machines of moderate complexity should be considered capable of making parts smaller than themselves, but not machines their own size. This suggests a two-phase architecture consisting of

- parts factories (general or special purpose -- parts accretion by mechanochemical deposition reactions is clearly a variant of solid freeform fabrication)

- motile construction units (again general or special purpose; an early breakdown might be transport and assembly) which build infrastructure, more construction units, and more factories, from parts

The remainder of the full paper presents, in some detail, a specific architectural proposal (and bootstrap procedure) that exemplifies these principles.

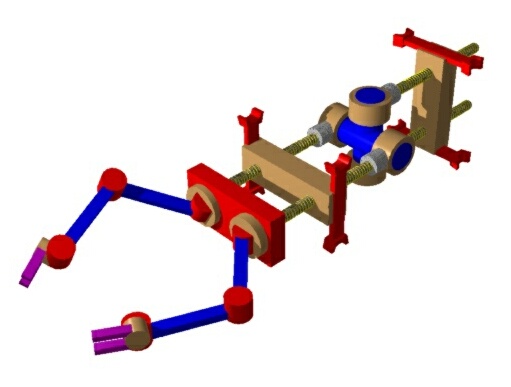

Figure 1. A motile parts-assembly robot. The outward clamps in the rear engage the struts of the framework. Early generations of the robot have no onboard control but obey signals transmitted across the framework.

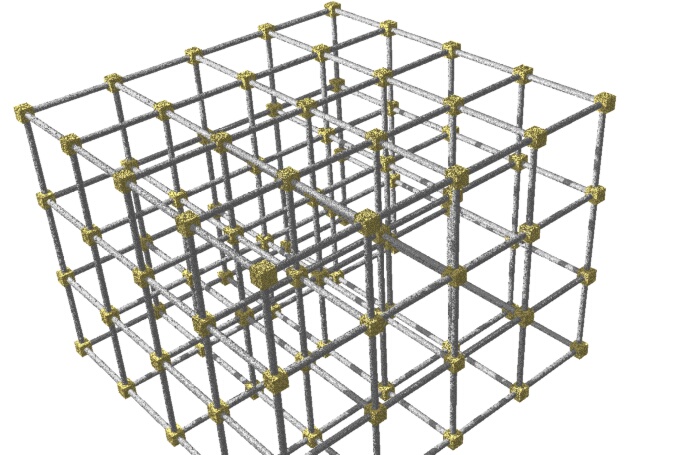

Figure 2. The framework. The bootstrap configuration is a small framework, one assembly robot, and a pile of parts. The robot builds a parts fabricator and other robots, and extends the framework. Later robots, and framework nodes (the cubes joining the struts) made from fabricator parts rather than from the bootstrap stock, have onboard computers. Ultimately the framework is a distributed control system coordinating the activities of large numbers of robots, as well as the physical infrastructure for the target product.

References

- Burks, A. W., ed. (1966). Theory of Self-Reproducing Automata [by] John von Neumann. Urbana: University of Illinois Press.

- Drexler, K. E. (1992). Nanosystems: Molecular Machinery, Manufacturing, and Computation. New York: Wiley.

- Freitas, R., and W. P. Gilbreath (1980). Advanced Automation for Space Missions. NASA CP 2255. NTIS Order No. N83-15348.

- Friedman, G. (1996). The Space Studies Institute View on Self-Replication. The Assembler (NSS/MMSG Newsletter) 4, No. 4, Fourth Quarter.

- Jurrens, K. (1993). An Assessment of the State-of-the-Art in Rapid Prototyping Systems for Mechanical Parts. NISTIR 5335. Gaithersburg, MD: National Institute of Standards and Technology.

- Merkle, R. C. (1992). Self Replicating Systems and Molecular Manufacturing. JBIS 45, pp. 407-413.

- Merkle, R. C. (1996). Design considerations for an assembler. Nanotechnology 7, pp. 210-215.

*Corresponding Address:

J. Storrs Hall, PhD.

Institute for Molecular Manufacturing

555 Bryant St. Ste. 253, Palo Alto, CA 94301 USA

tel 650-917-1120, fax 650-917-1123

Web: http://www.imm.org/HallCV.html
