Presenter

Natalie Dullerud

Natalie Dullerud is a first-year PhD student at Stanford University and recently received her Master’s from the University of Toronto. She previously graduated with a Bachelor’s degree in mathematics from the University of Southern California, with minors in computer science and chemistry. At the University of Toronto, Natalie was awarded a Junior Fellowship at Massey College, and she has completed several research internships at Microsoft Research. Natalie’s research largely focuses on machine learning through differential privacy, algorithmic fairness, and applications to clinical and biological settings.

Summary:

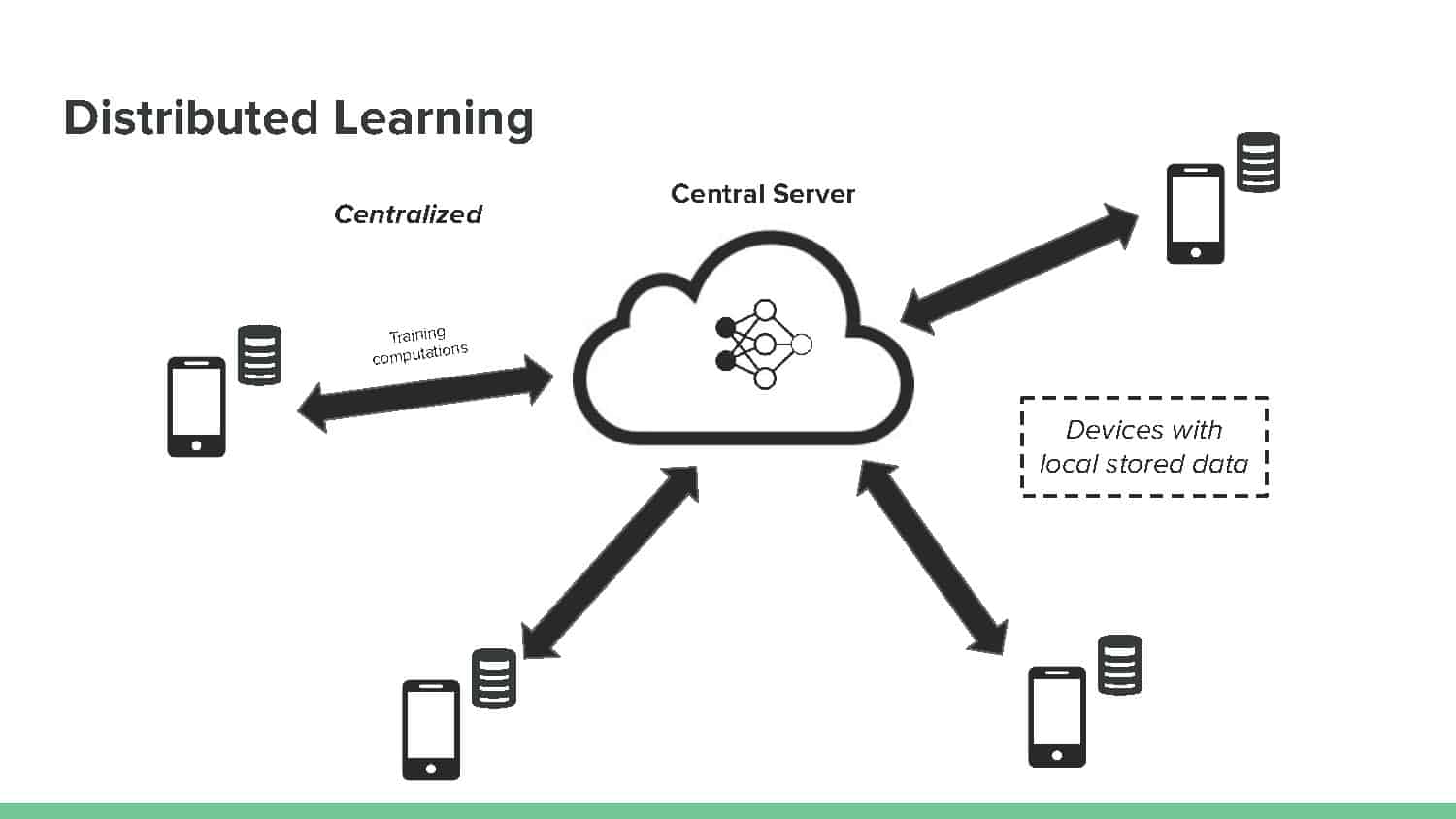

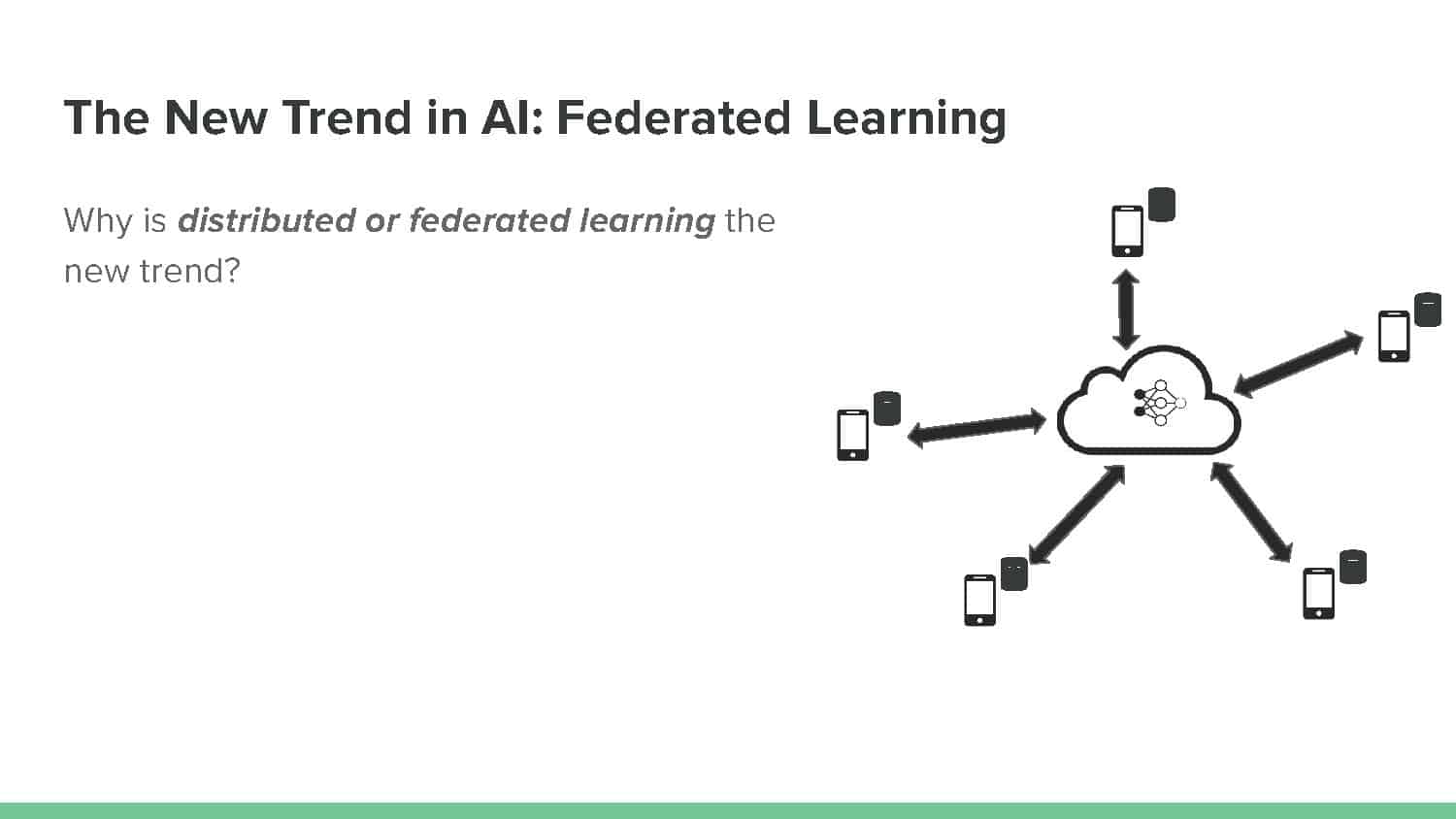

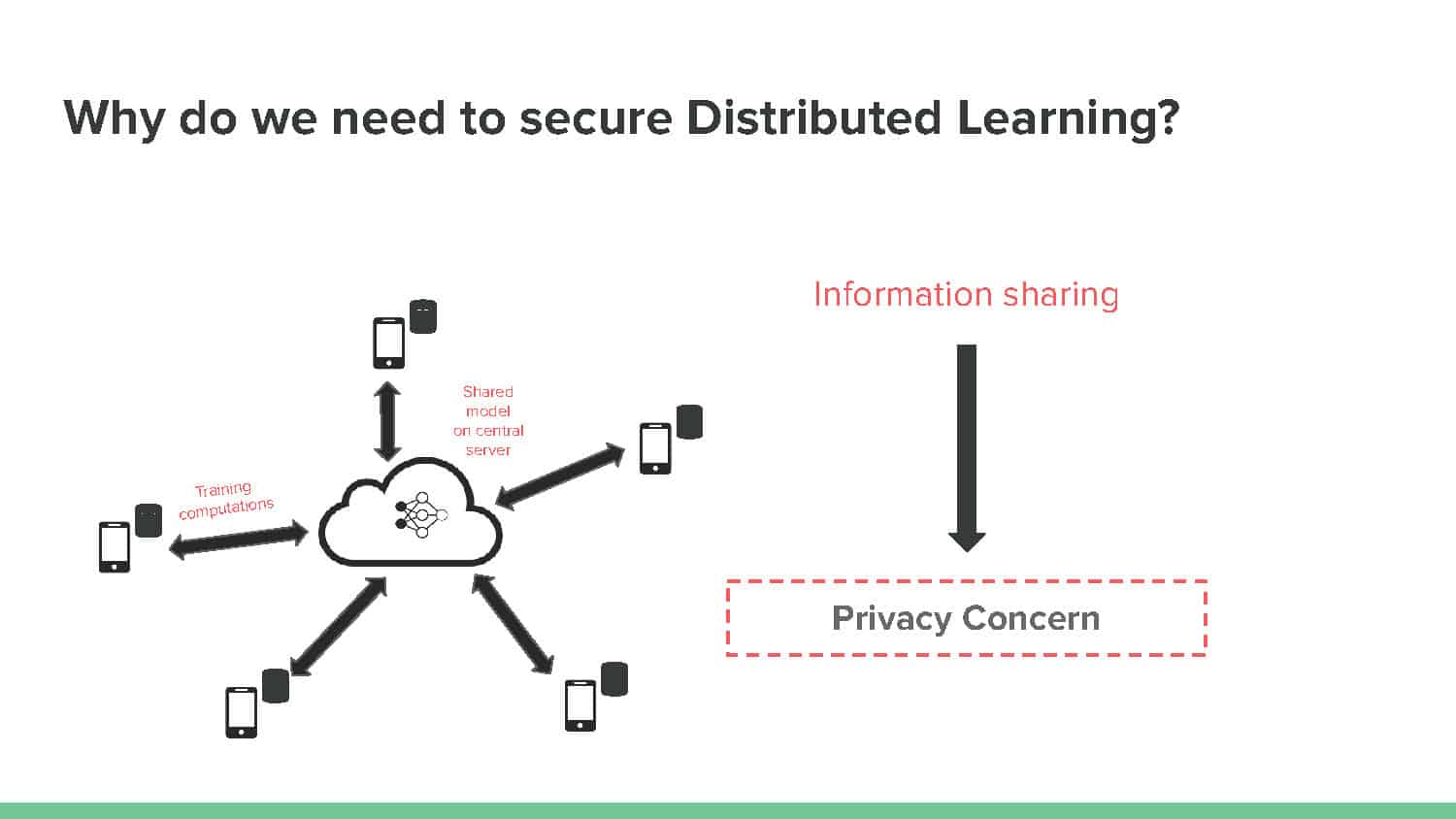

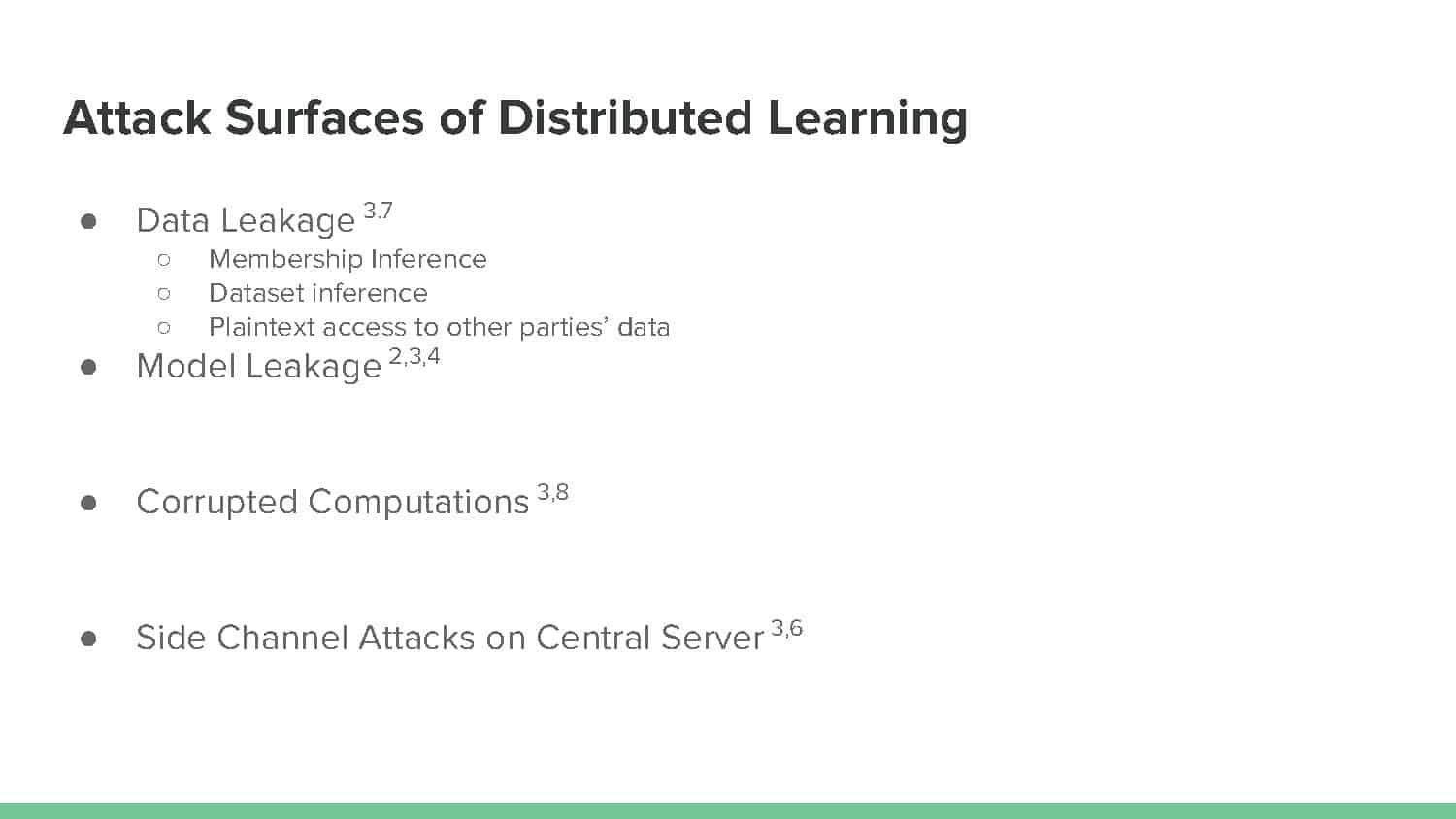

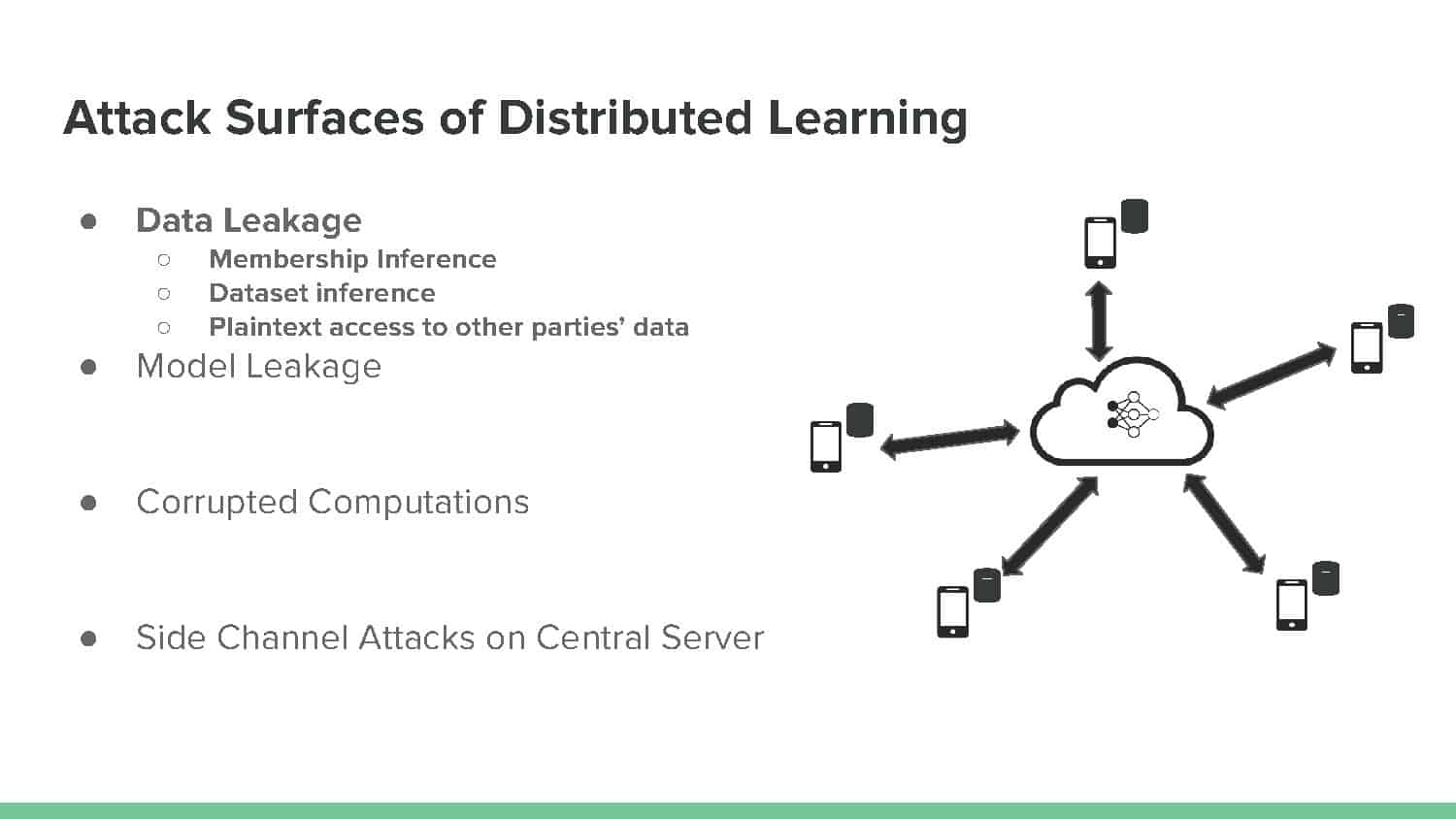

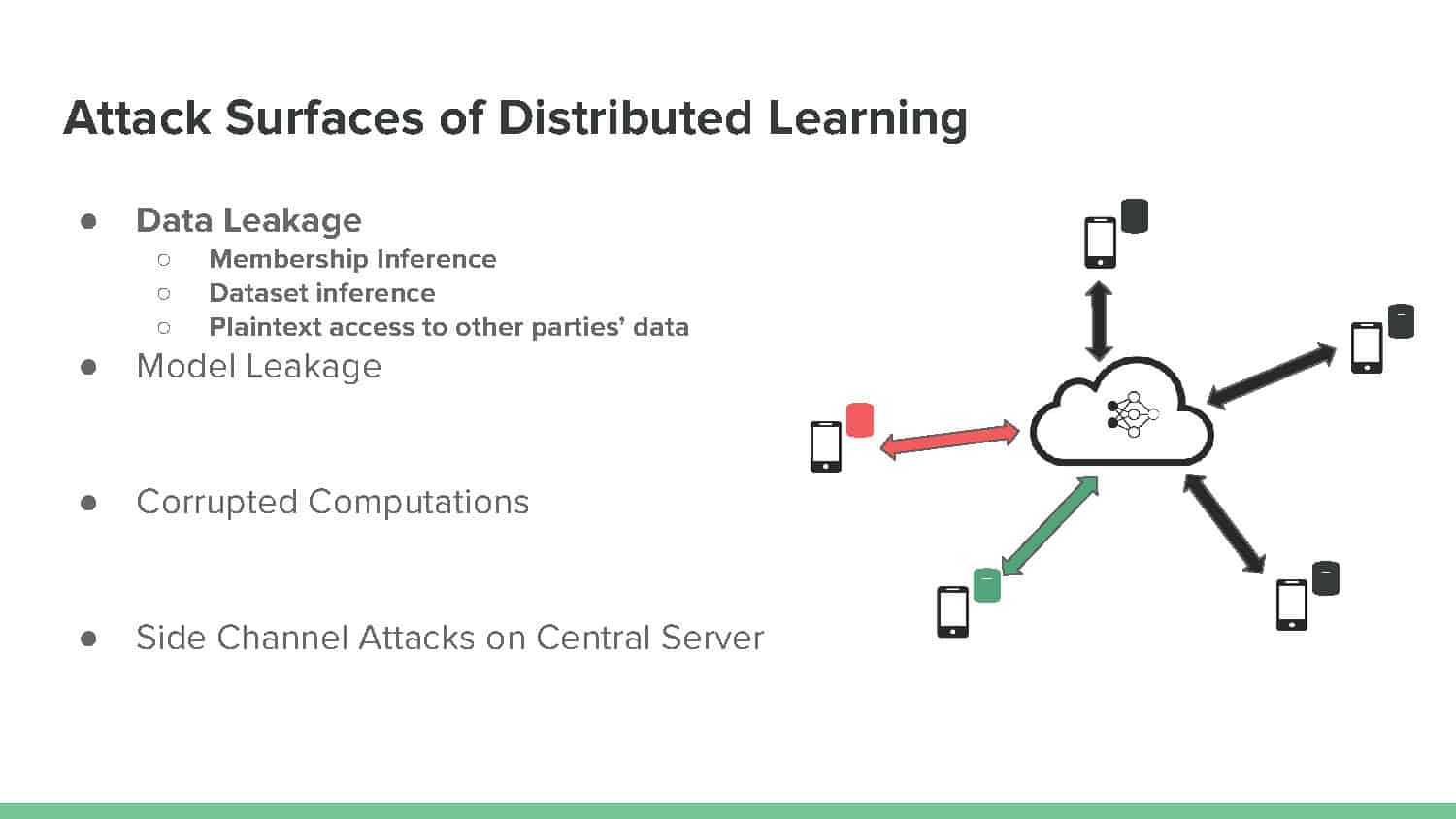

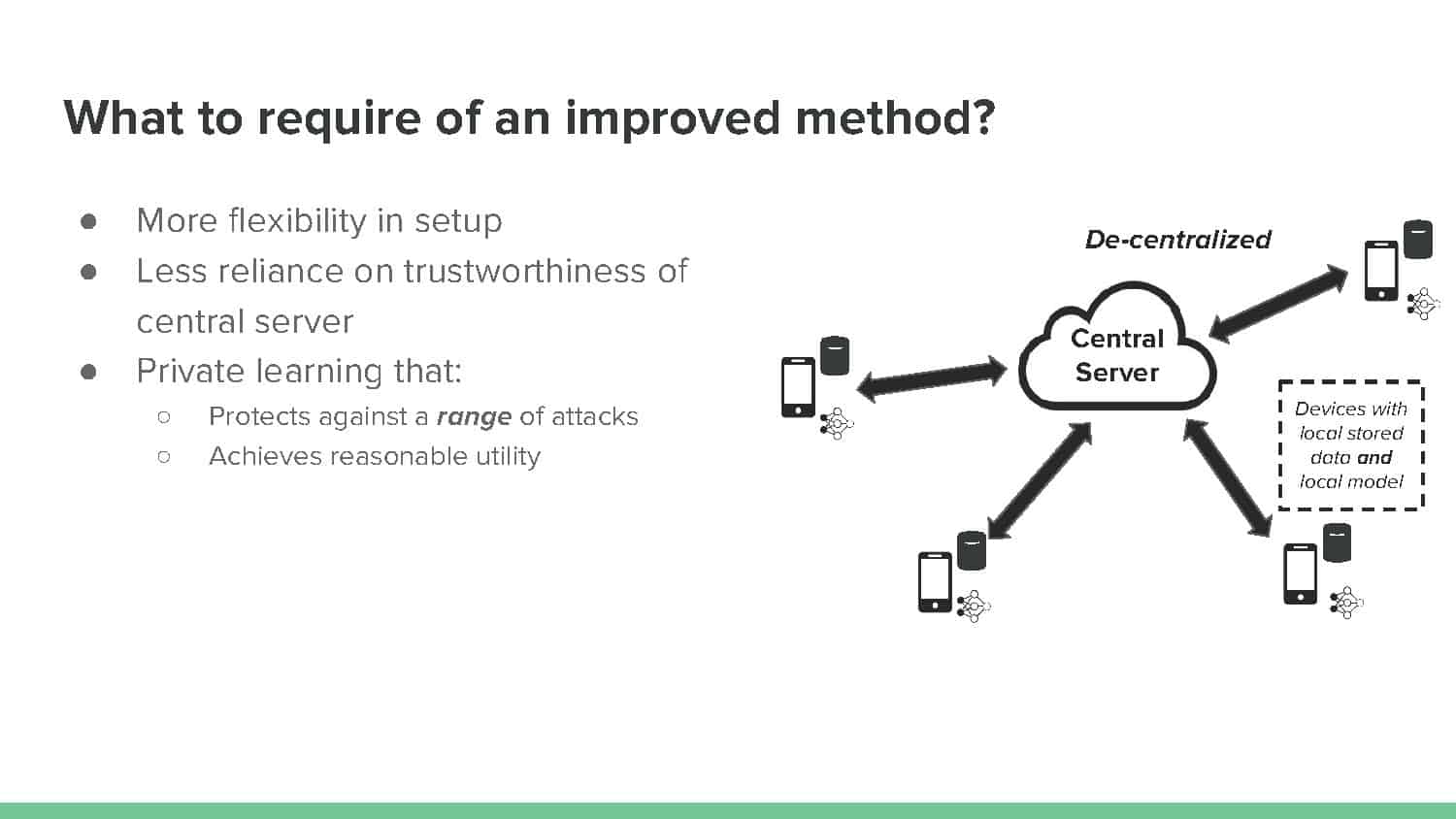

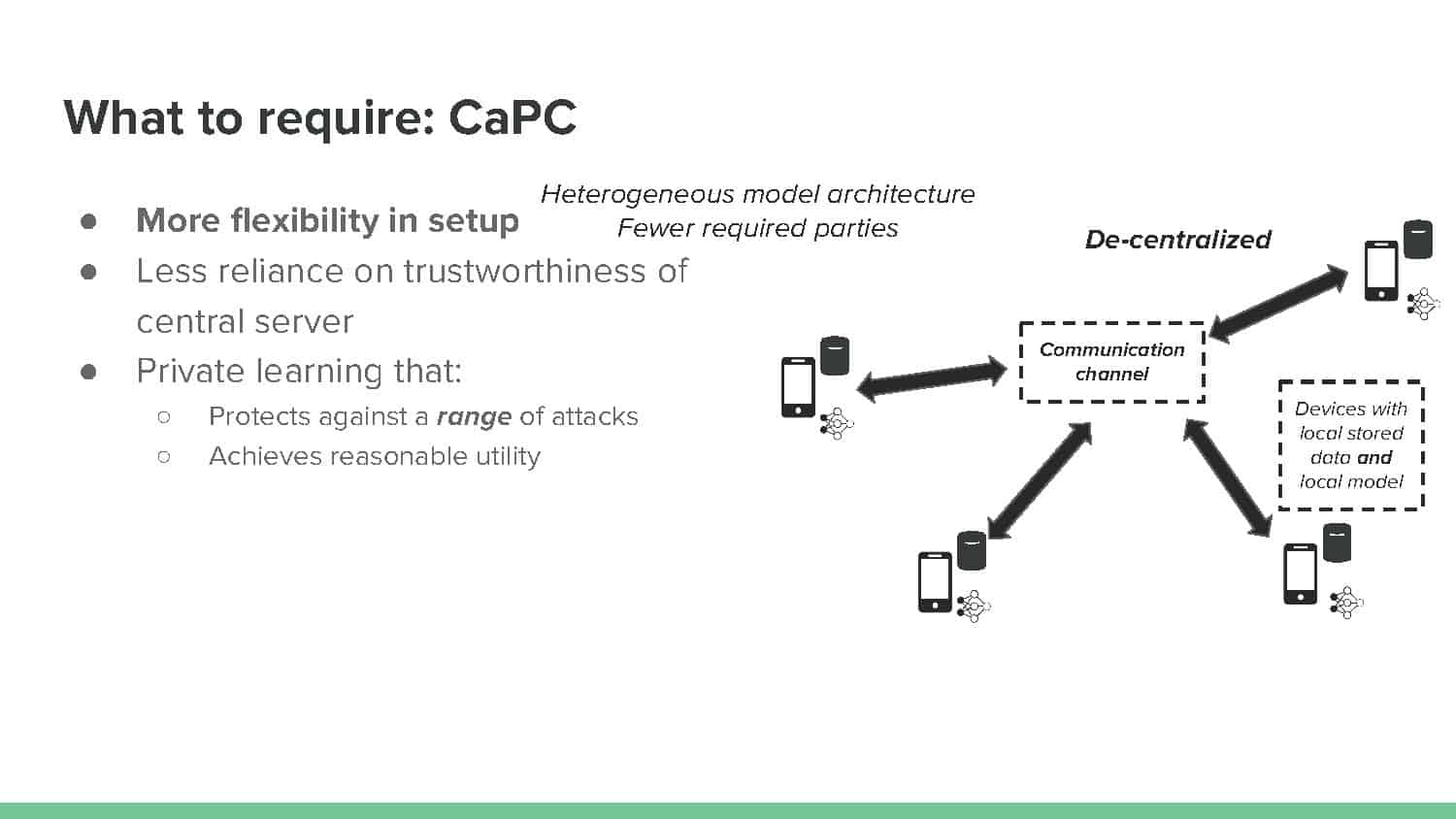

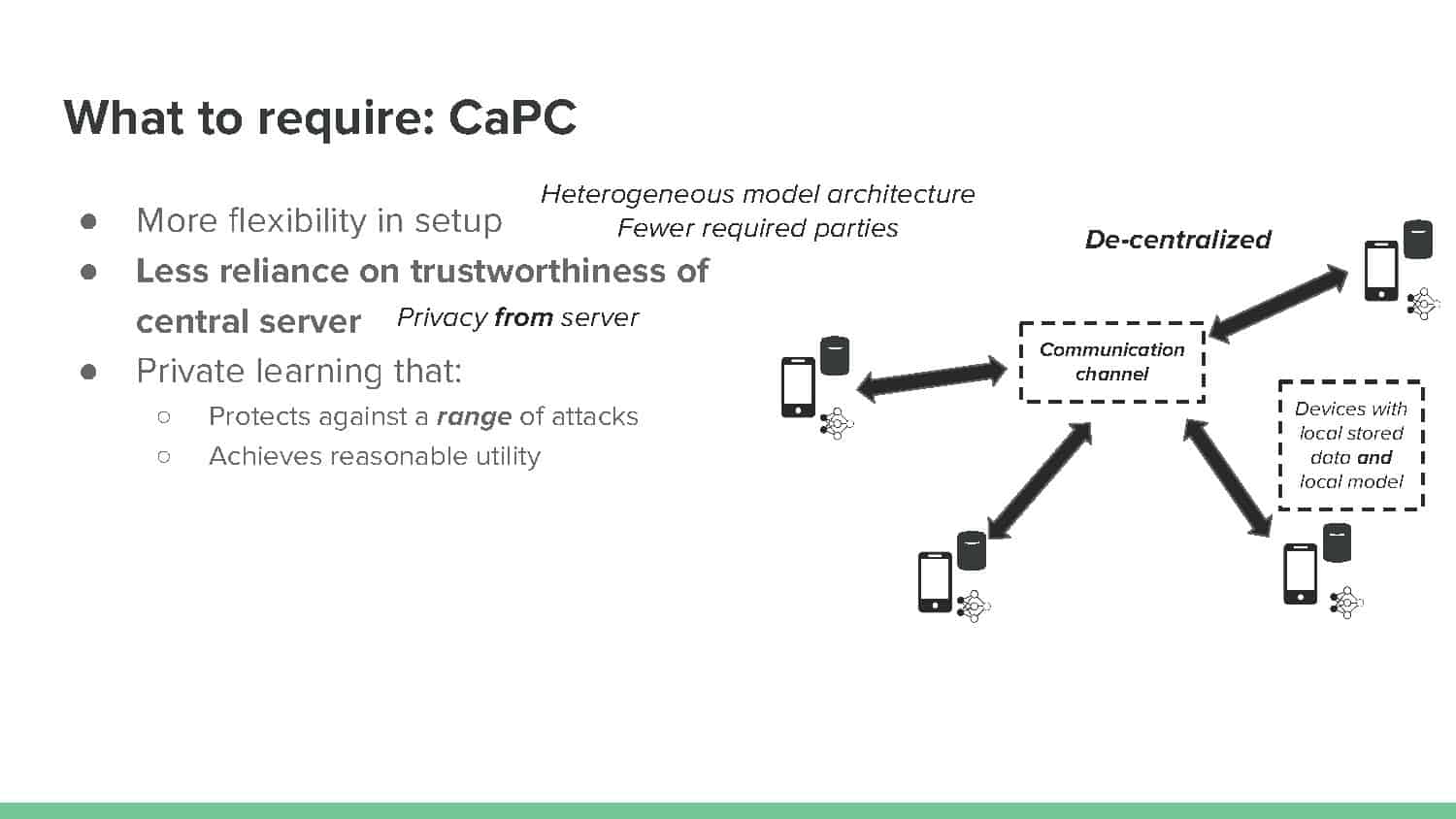

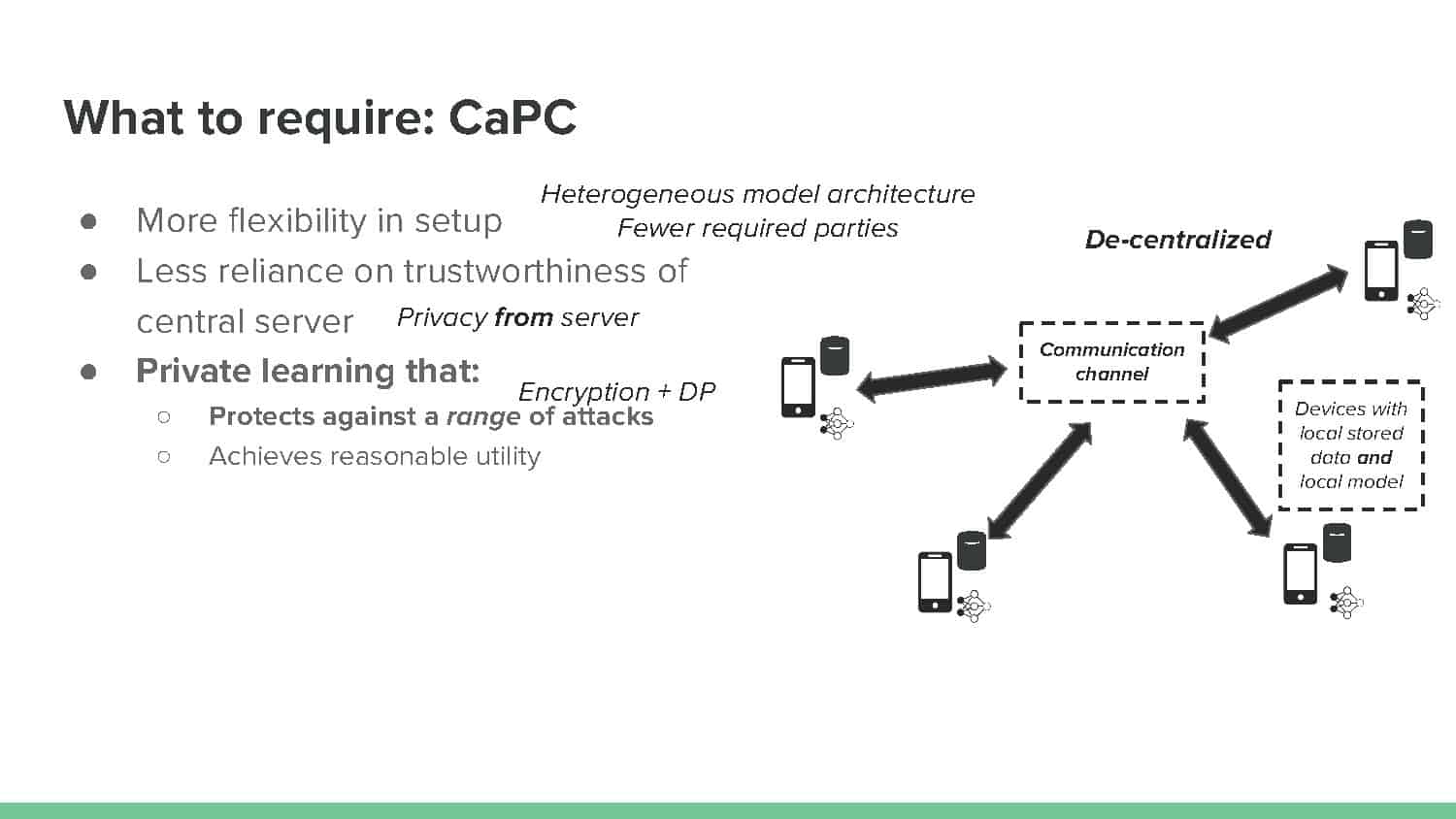

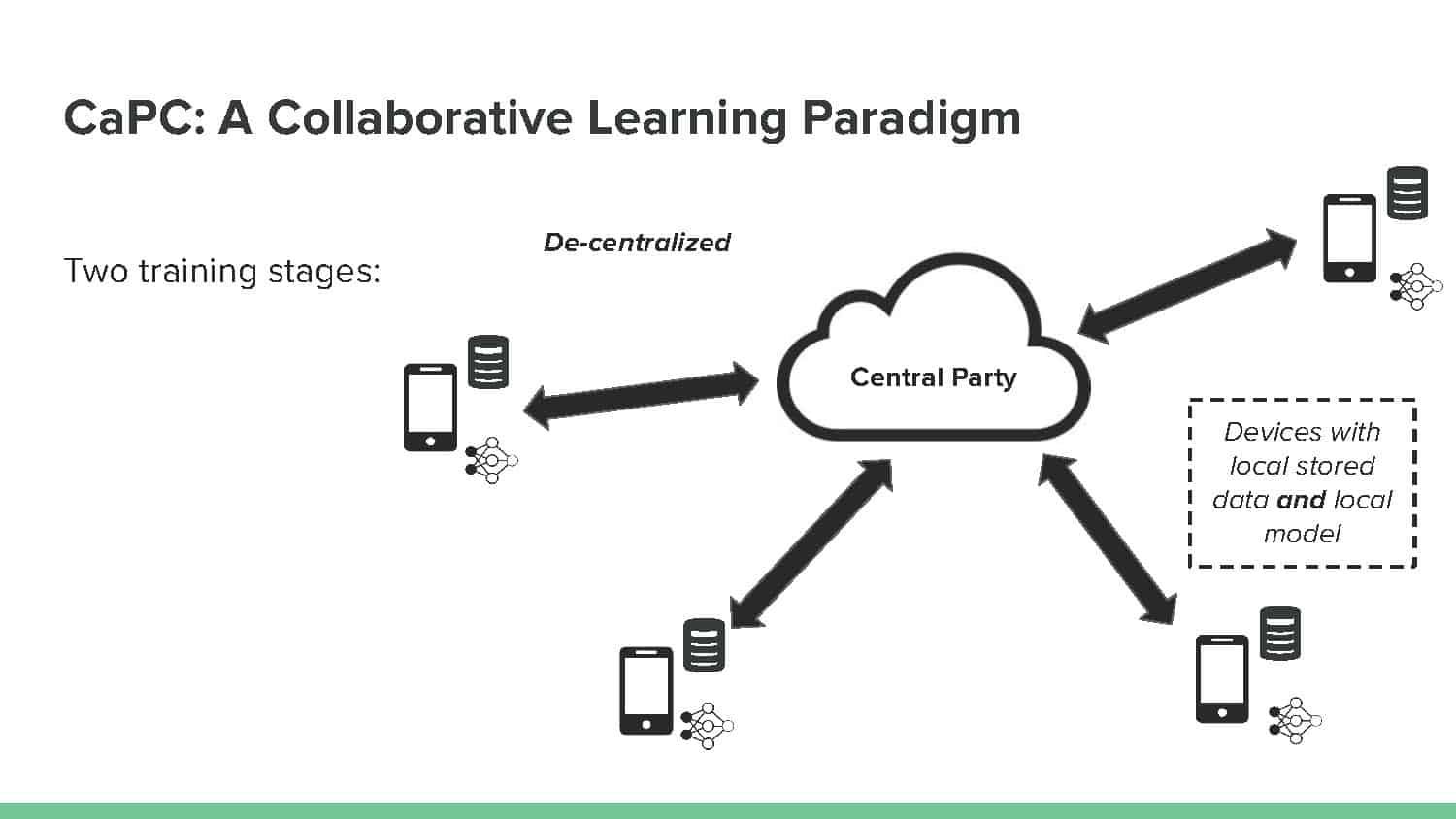

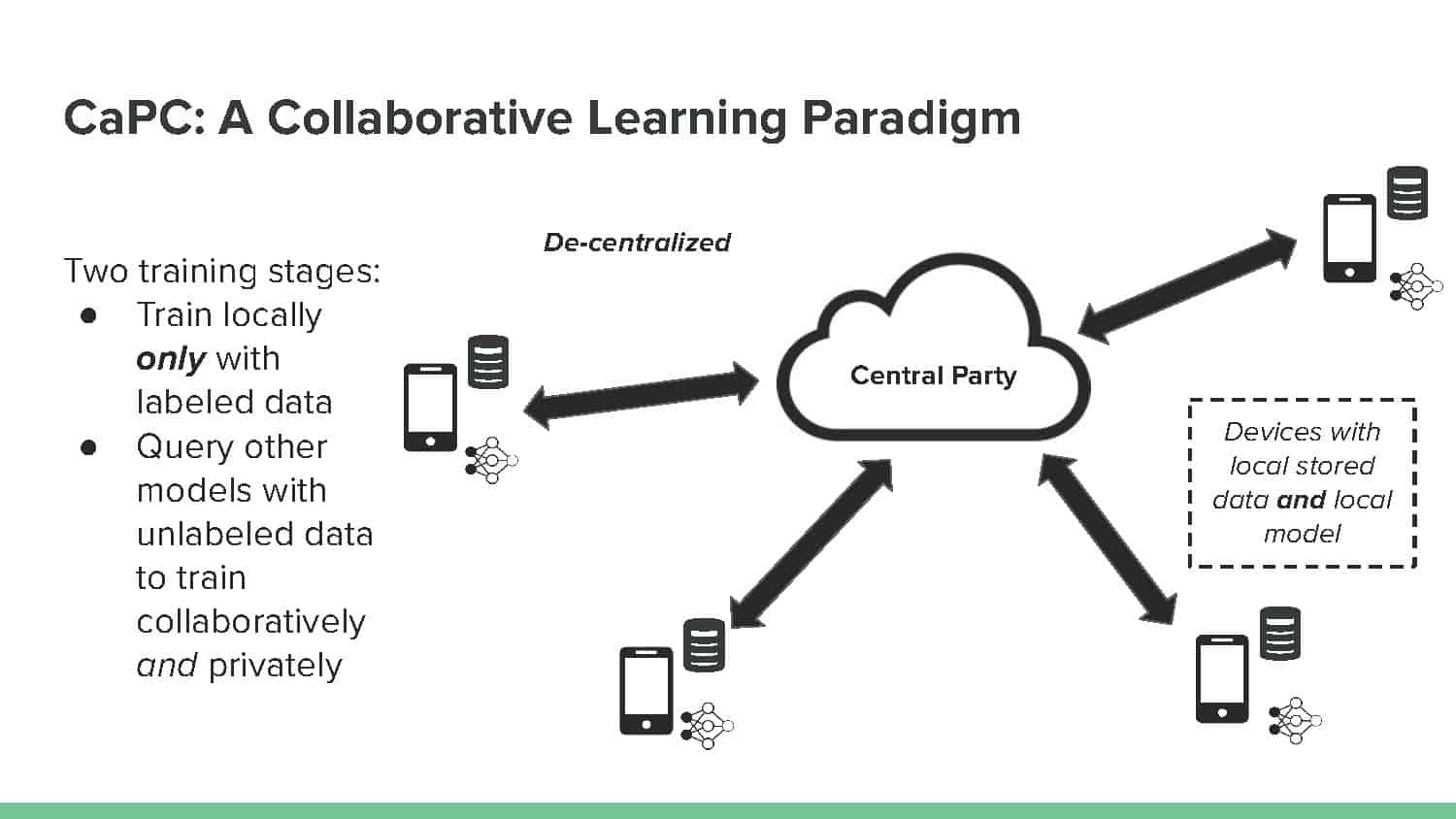

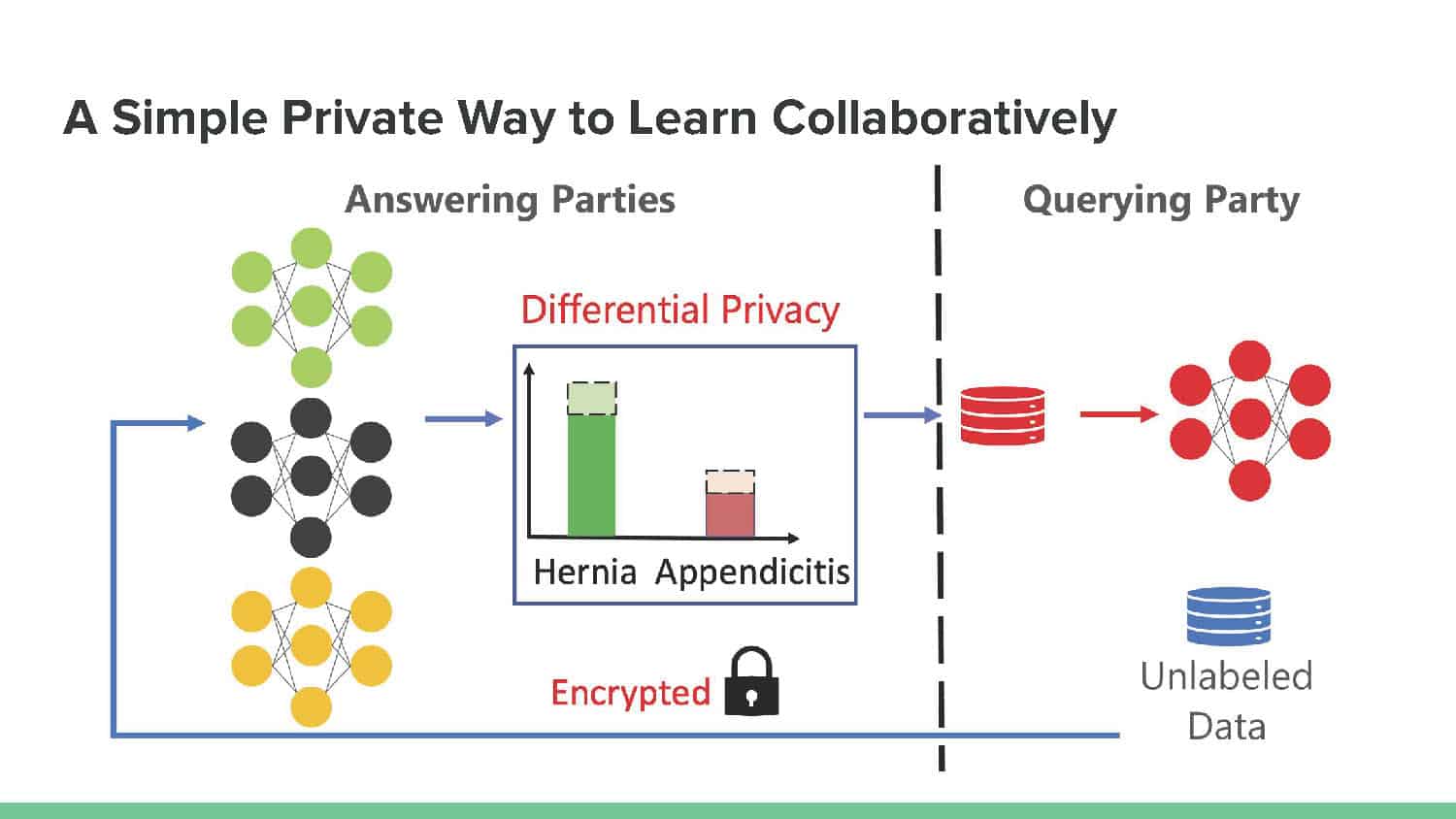

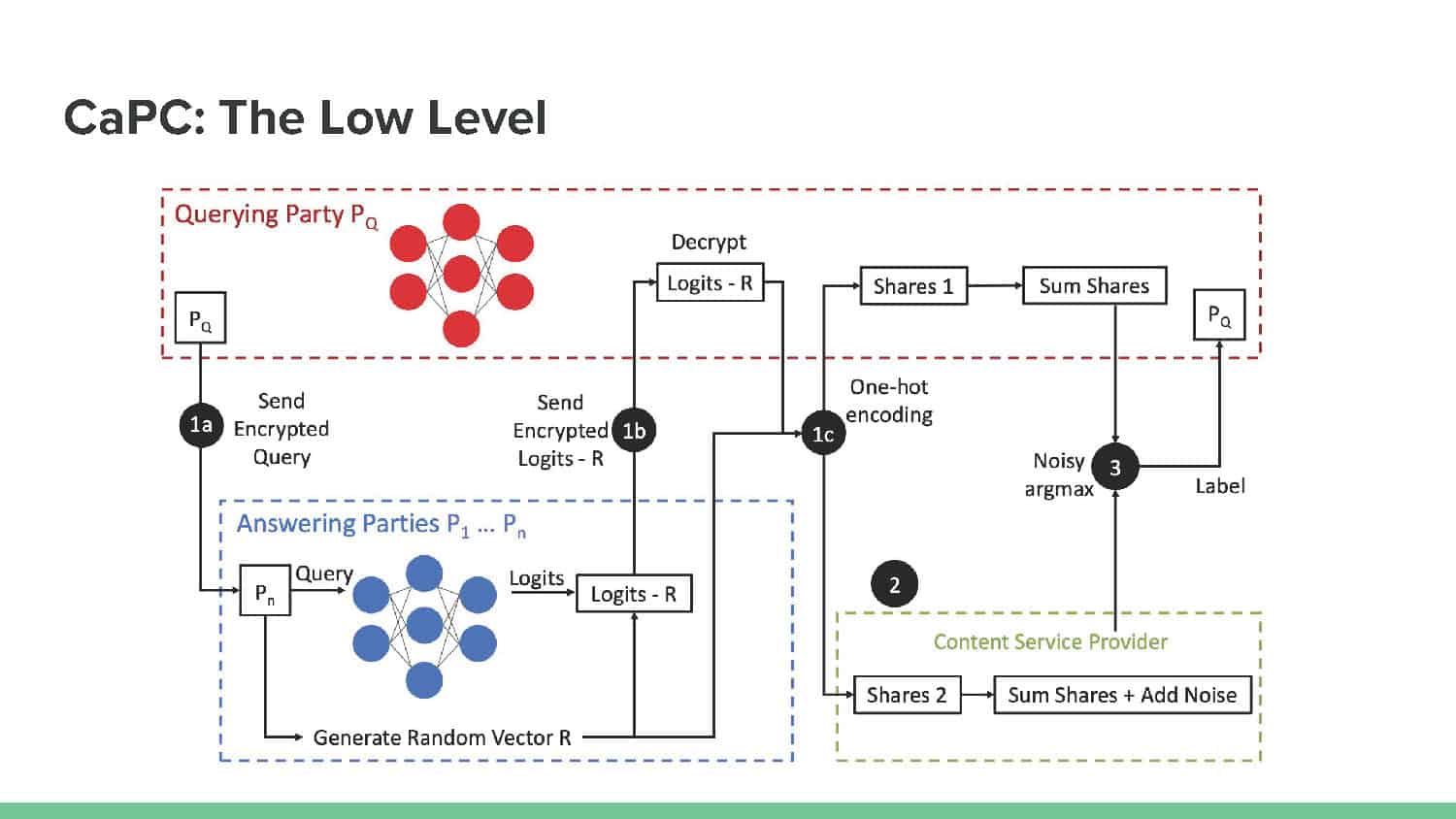

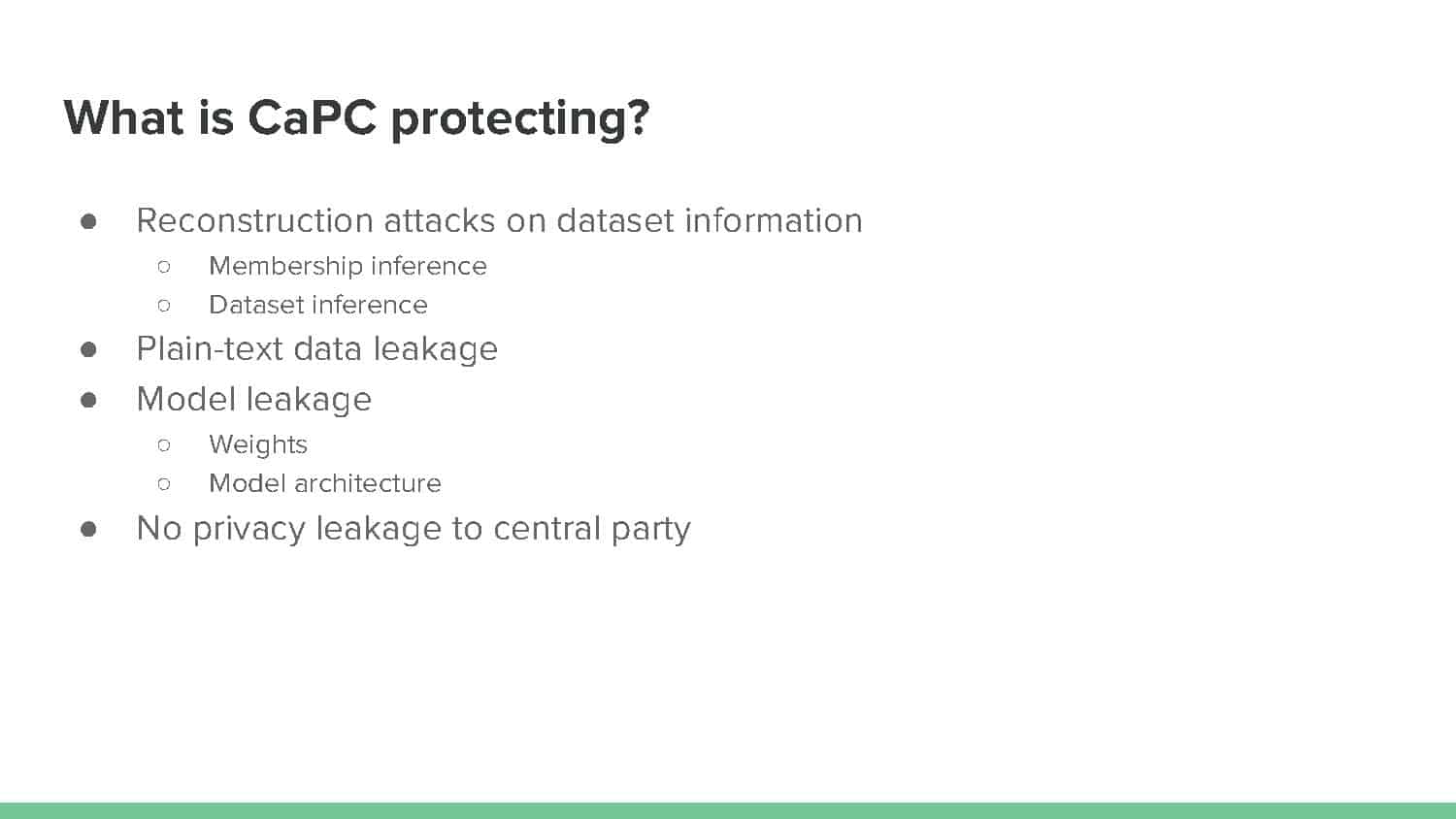

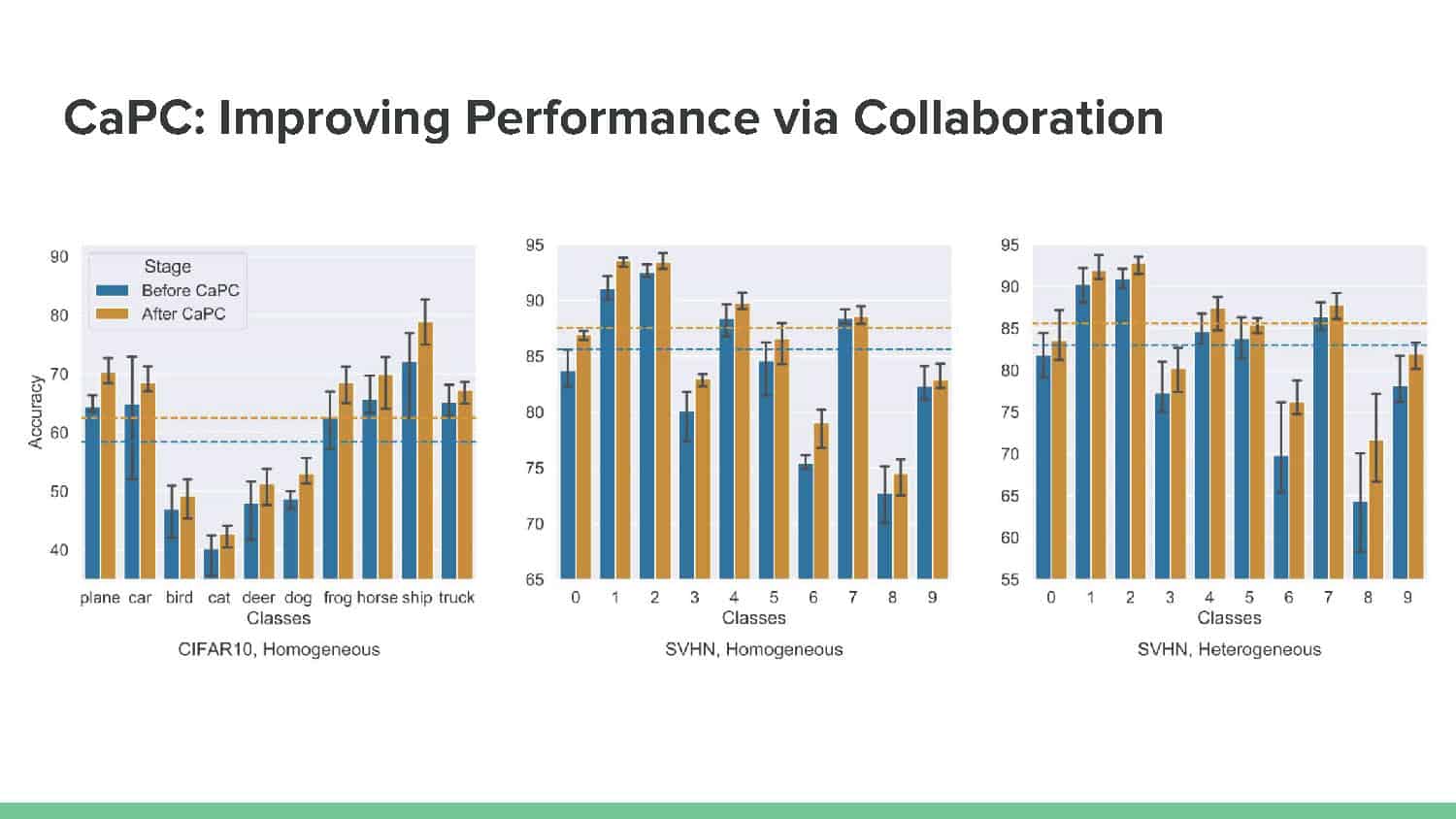

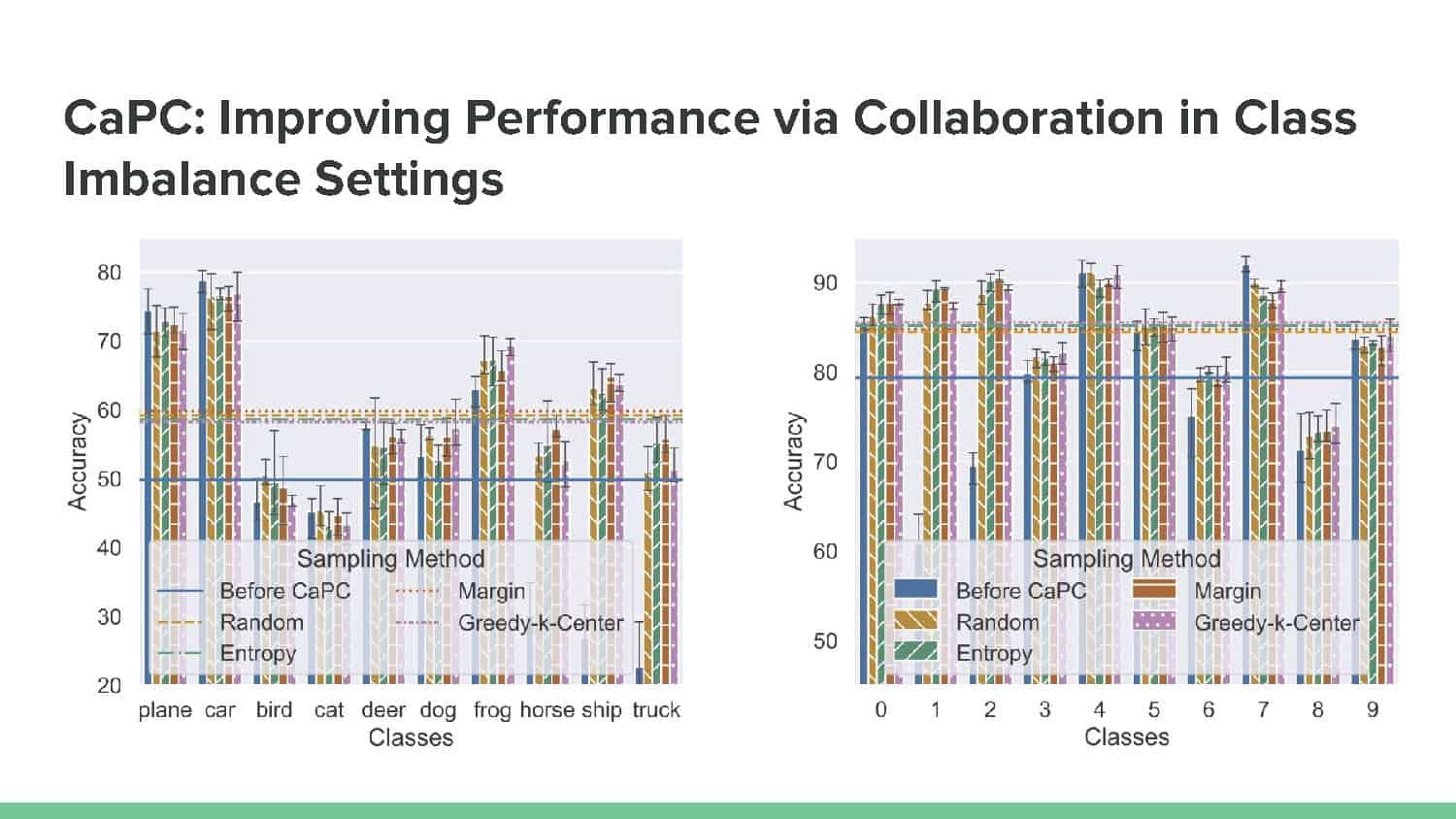

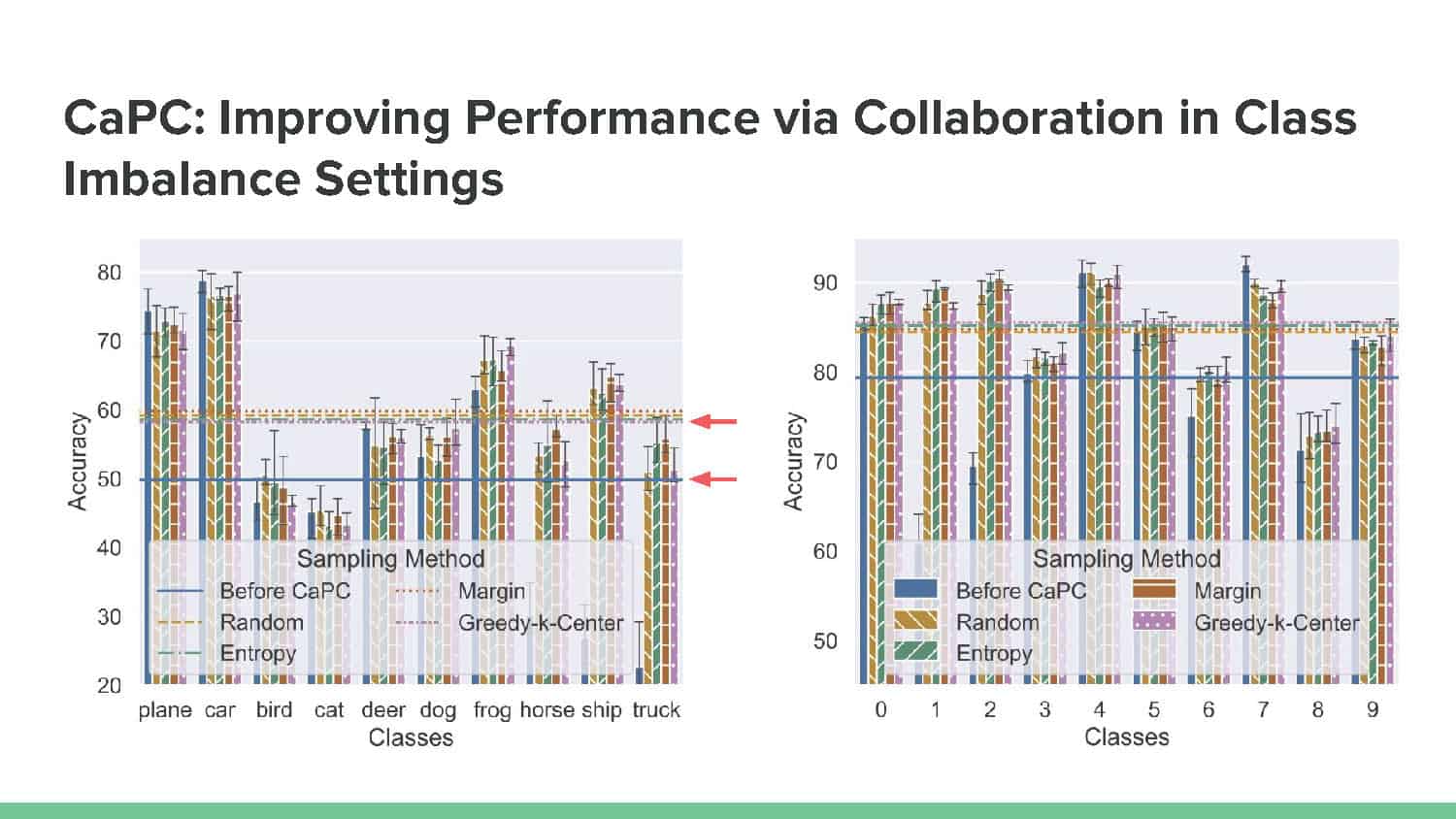

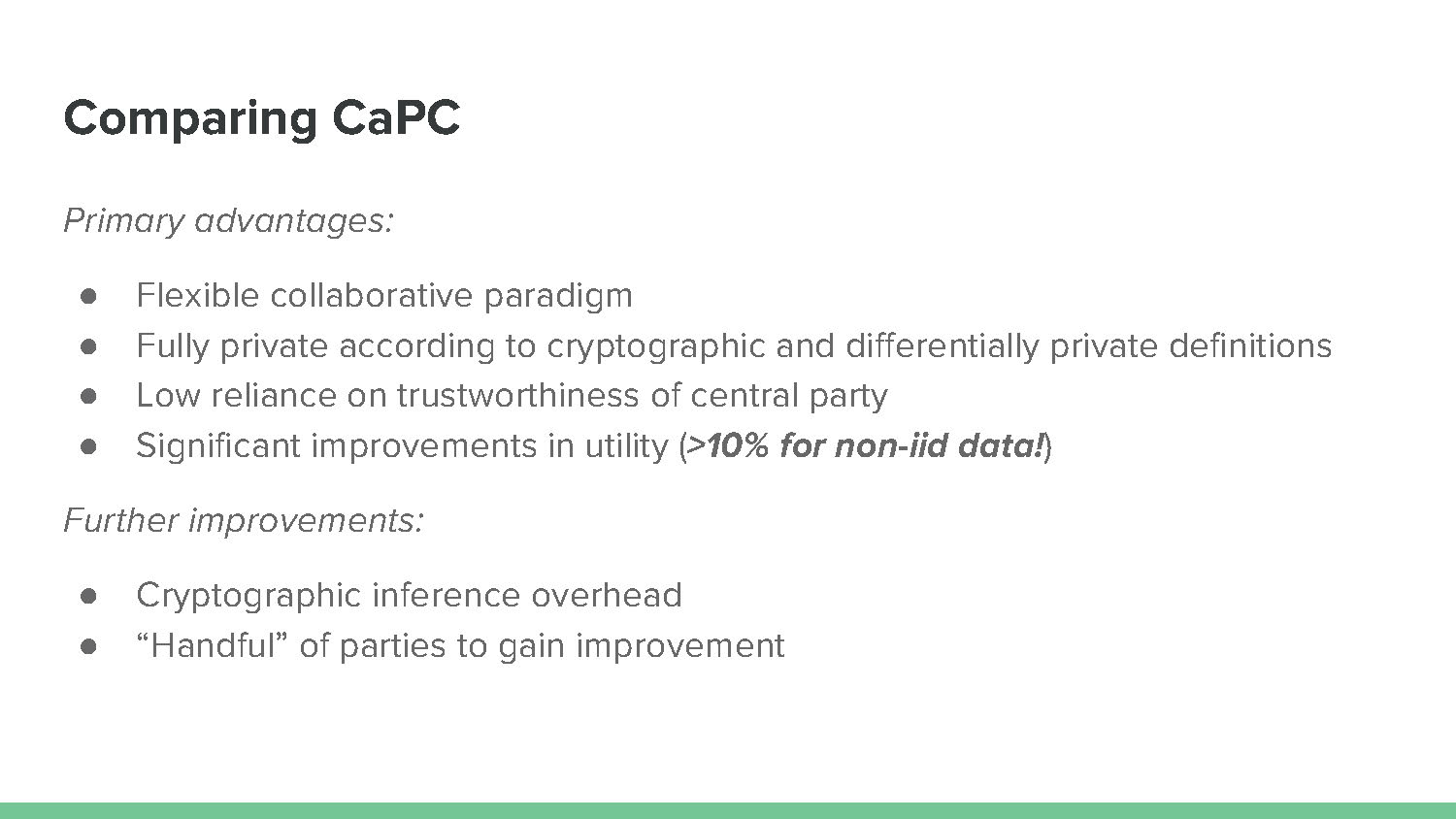

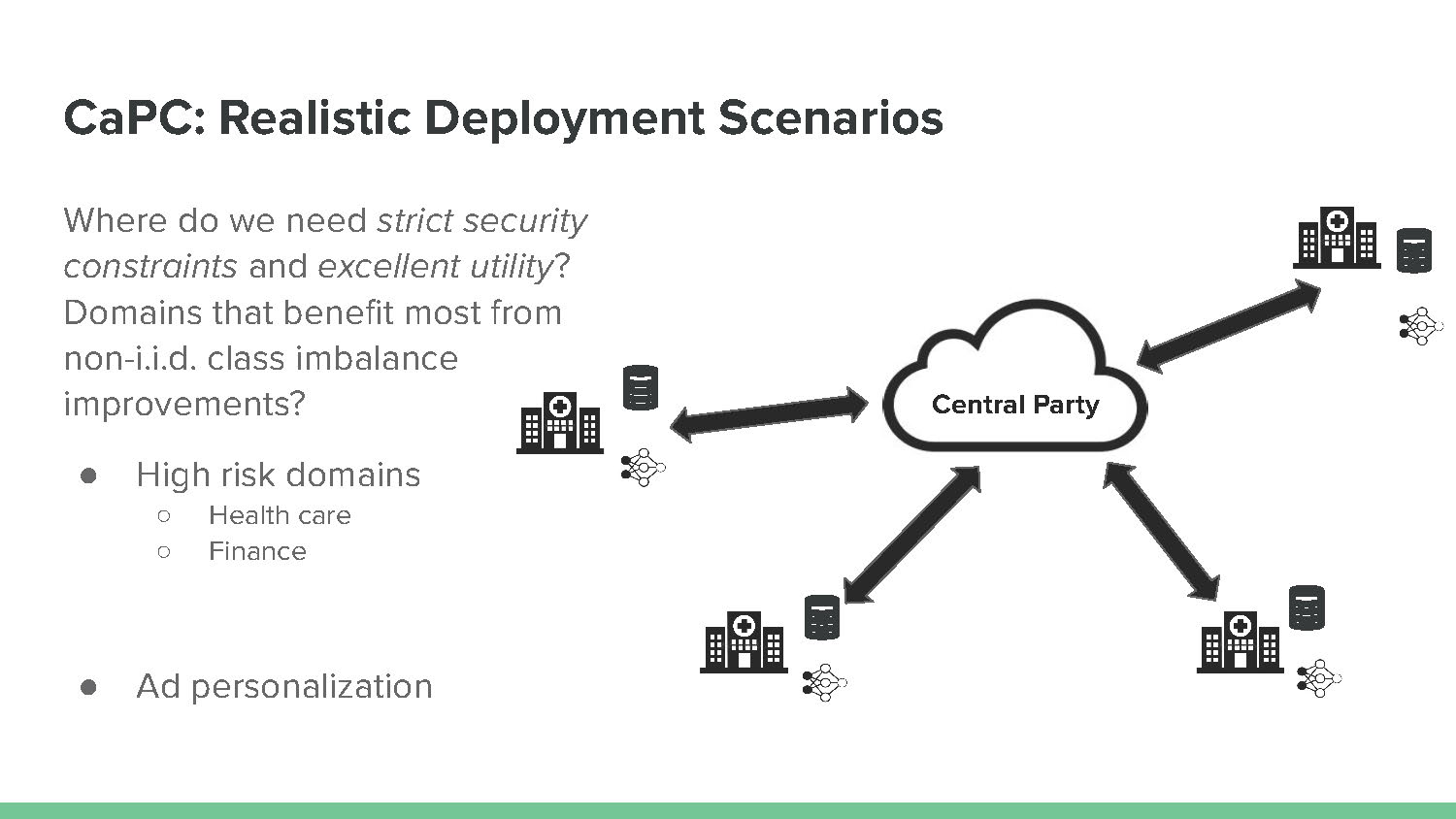

Machine learning benefits from large training datasets, which a single entity cannot always collect, especially when the data is privacy-sensitive. In many contexts, such as healthcare and finance, separate parties may wish to collaborate and learn from each other’s data but are prevented from doing so by privacy regulations. This talk will describe Confidential and Private Collaborative (CaPC) Learning, a recently published framework that allows entities to collaborate via privacy-preserving mechanisms in order to improve their own local models while maintaining data privacy under both cryptographic and differential-privacy definitions. The talk will cover current definitions, concerns, and techniques in the private distributed learning setting, then turn to the technical details and advantages of CaPC in practical settings. Finally, we will discuss future directions in privacy-preserving and confidential collaborative learning.
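To make the differential-privacy side of such a collaboration more concrete, here is a minimal sketch of noisy vote aggregation in the style of PATE: several parties each predict a label for a query, and the querying party only sees the arg max of the noised vote counts. This is an illustration under assumptions, not the CaPC protocol as presented in the talk: the function name `noisy_label`, the choice of Laplace noise, and the `epsilon` parameter are hypothetical, and the cryptographic (confidentiality) layer is omitted entirely.

```python
import random
from collections import Counter


def noisy_label(votes, num_classes, epsilon, rng):
    """Return the class with the highest noised vote count.

    votes: list of integer class predictions, one per answering party.
    epsilon: hypothetical noise parameter; smaller values add more noise.
    rng: a random.Random instance, passed in so runs can be reproduced.
    """
    counts = Counter(votes)
    noisy_counts = []
    for label in range(num_classes):
        # The difference of two exponentials with rate epsilon is a
        # Laplace sample with scale 1/epsilon.
        noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
        noisy_counts.append(counts.get(label, 0) + noise)
    # Only the winning label is released, never the raw counts.
    return max(range(num_classes), key=lambda label: noisy_counts[label])


if __name__ == "__main__":
    rng = random.Random(0)
    party_votes = [2, 2, 2, 1, 2, 0, 2, 2]  # illustrative predictions
    print(noisy_label(party_votes, num_classes=3, epsilon=1.0, rng=rng))
```

When the parties largely agree, the noise rarely changes the outcome; when they are split, the released label becomes less reliable, which is one small-scale view of the privacy and utility tension discussed in this talk.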

Challenge:

The primary bottleneck in privacy at the moment, in applications of differential privacy to machine learning and to deep learning in particular, is the tradeoff between privacy and utility (and between privacy and fairness), especially in settings that require both, such as learning on clinical data. Recent research has iterated on existing privacy methods in deep learning to mitigate this tradeoff, so that the resulting model retains reasonable privacy guarantees and high utility. However, as many have said, differential privacy has not yet had its “AlexNet moment.” For differential privacy to become usable in practice, it must deliver good model utility alongside its privacy guarantees.