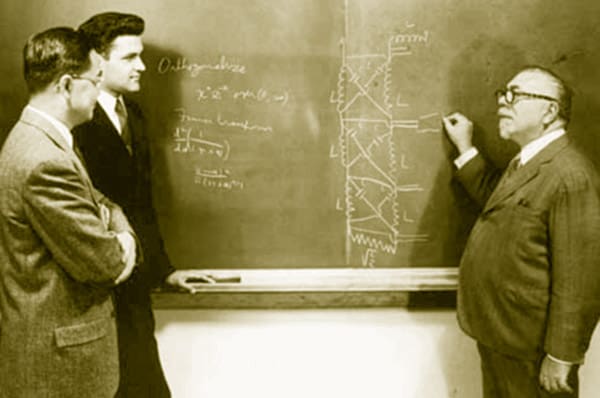

- 40s: Cybernetics, the notion that the brain did logic in circuits, feedback

- 50s: the computer, stored programs, Logic Theorist

- 60s: LISP, semantic nets, GOFAI

- 70s: SHRDLU, AM

- 80s: AI winter, expert systems, neural nets

- 90s: robots, machine learning

- 00s: DARPA Grand Challenge level of competence

The main point of this post is to answer any objections of the form: you’ve been working on this so long, why don’t you have it yet? (Or perhaps, AI is the technology of the future and always will be. 🙂 )

One key thing to note is that cybernetics was the original line of inquiry that was going to let us understand how the brain worked and allow us to build smart machines. Many people assume that cybernetics failed, since it more or less disappeared as a discipline. In fact it produced some key and useful insights, which formed the basis of control theory and neuroscience. It fell apart for other reasons: personalities in its cadre (a veritable soap opera between Wiener, McCulloch, and Pitts involving Wiener’s daughter) and political disfavor in the US stemming from Wiener’s antiauthoritarian stances.

So GOFAI was born with a built-in bias against some of the insights of cybernetics. That has now been repaired, forced by the reintegration of control theory and the growing use of knowledge from neuroscience in the 90s, when AI robotics began to get serious. That bias is one reason AI floundered in the 80s; another is a diversion from basic research to applications before the field was really ready.

Another point that is rarely made is that AI, the small sub-discipline of CS, isn’t the major part of the 20th-century work that will have led to intelligent machines. The major part is the invention of the computer itself, along with all the work that’s been done to bring us the processing power we need to do the job and the software to manage both that power and the complexity of human-comparable systems. And nobody could reasonably claim that that effort has been standing still, or has come to nothing, or anything even vaguely similar.

An AI will be a hardware/software network and system so complex and powerful that it will make the entire ARPANET of the 70s look like a toy, and it will have to manage its own internals completely automatically. I personally think that it will need the internal robustness that can only come from incorporating feedback and automatic resource management into the basic fabric of its computing platform. But that’s the kind of thing that can easily be done in a decade, once someone decides to do it. And it will be useful for a lot of other applications as well!