Summary

Today we’re discussing “From Value Diversity to Voluntary Cooperation”.

Upon investigation, we discover that values are quite diverse, even within an individual. Often it is hard to determine our values with any certainty at all! Is internal conflict a feature or a bug? With so little insight into our own values, and as our environment becomes more diverse, our values drift, and that drift behooves us to adopt strategies of voluntary cooperation.

In this session, Robin Hanson discusses Value Drift, followed by Mark Miller on Voluntary Cooperation strategies.

This meeting is part of the Intelligent Cooperation Group and accompanying book draft.

Presenters

Robin Hanson

Robin Hanson is an associate professor of economics at George Mason University, and research associate at the Future of Humanity Institute of Oxford University. He has a doctorate in social science from California Institute of Technology, master’s degrees in physics and philosophy from the University of Chicago, and nine years’ experience as a research programmer at Lockheed and NASA…

Mark Miller

Mark S. Miller is Chief Scientist at Agoric. He is a pioneer of agoric (market-based secure distributed) computing and smart contracts, the main designer of the E and Dr. SES distributed persistent object-capability programming languages, inventor of Miller Columns, an architect of the Xanadu hypertext publishing system…

Presentation: Robin Hanson: Value Drift & Futarchy: Vote Values, Bet Beliefs

Value Drift

- Ordinary decision theory separates values and beliefs about facts as the two components of decisions. The latter should change as you learn about the world, but your values are assumed to be constant, the anchor for decision-making.

- So in these contexts, the idea of “value drift” seems scary: values are supposed to be anchors!

- But then… what is Value Drift? Often when folks talk about values, they aren’t talking about these core anchors; they’re talking about shiftier stuff that may be faction-based, or about symbols we’re coordinating around.

- You might say that human values haven’t changed: we get hungry, we get sleepy, we need shelter. But if you look at mission statements and law changes and the like, our values have certainly changed over the years.

- It’s commonly accepted that your human descendants will have values that differ from yours: children branch out and relate to the world differently. And people’s values clearly seem to change over their own lifetimes as well. This is value drift.

- Sharing all the same values is not how we tend to keep the peace: instead, we use norms and laws. In the long run, this is probably a more robust strategy for coordinating people than attempting to hold values in stasis.
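
To make the values/beliefs separation concrete, here is a minimal Python sketch of an expected-utility choice in which the values stay fixed while the beliefs are updated. The umbrella scenario, the outcome names, and all numbers are hypothetical, chosen only for illustration.

```python
from typing import Dict

# Hypothetical numbers for illustration only.
# VALUES: how much the agent cares about each outcome (the fixed "anchor").
VALUES: Dict[str, float] = {
    "wet": 0.0,
    "dry_carrying_umbrella": 0.7,
    "dry_unburdened": 1.0,
}


def beliefs_for(p_rain: float) -> Dict[str, Dict[str, float]]:
    """BELIEFS: probability of each outcome under each action, given P(rain)."""
    return {
        "take_umbrella": {"dry_carrying_umbrella": 1.0},
        "leave_umbrella": {"wet": p_rain, "dry_unburdened": 1.0 - p_rain},
    }


def expected_utility(outcome_probs: Dict[str, float], values: Dict[str, float]) -> float:
    """Weight each outcome's value by its believed probability."""
    return sum(p * values[o] for o, p in outcome_probs.items())


def best_action(beliefs: Dict[str, Dict[str, float]], values: Dict[str, float]) -> str:
    """Pick the action with the highest expected utility."""
    return max(beliefs, key=lambda a: expected_utility(beliefs[a], values))


# Same values, different beliefs: learning about the world changes the decision.
print(best_action(beliefs_for(0.2), VALUES))  # leave_umbrella
print(best_action(beliefs_for(0.6), VALUES))  # take_umbrella
```

In this framing, value drift would mean the `VALUES` table itself changing over time, which is exactly what the ordinary model assumes away.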

Futarchy

- Futarchy is an attempt at solving the generic governance problem.

- If you think of governance as “making good decisions”, then making good decisions requires setting an objective, aggregating information about how possible actions would affect that objective, and taking the actions that seem best to accomplish it.

- From that point of view, decision markets and prediction markets may work well for this. We can set objectives based on our values, then open markets for folks to predict the outcomes of potential actions.

- Most people who look at this say “Yeah that really might work well!” but then have no interest in actually trying it. Why’s this?

- Because governance is actually a way in which we struggle for power: some win, and the rest of us submit and pretend that the winners were right all along. Because of that, we are very indulgent of the people we give power to, and let them get away with a lot.

- This may be because we think we hold higher standards than we actually do. It’s an age-old observation that “we have kings, but they over there have tyrants”, usually made to justify invading “them over there”. In practice, it’s mostly submission to the rulers and pretending they are more legitimate than they are.

- It’s always been possible to write abstract scripts about how to better govern, but in reality, we generally submit to those in power and pretend they are legitimate so they don’t crush us.
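
Hanson’s slogan “vote values, bet beliefs” can be read as a two-part decision rule: voters set the weights of a welfare measure, and markets estimate each measured quantity conditional on each candidate policy. The minimal Python sketch below assumes a hypothetical two-metric welfare measure and made-up market estimates; it is an illustration of the idea, not a specification of futarchy.

```python
from typing import Dict

Metrics = Dict[str, float]


def welfare(metrics: Metrics, weights: Metrics) -> float:
    """Voted values: a weighted sum of measured outcomes."""
    return sum(weight * metrics[name] for name, weight in weights.items())


def choose_policy(market_estimates: Dict[str, Metrics], weights: Metrics) -> str:
    """Adopt the policy whose market-estimated outcomes score highest."""
    return max(market_estimates, key=lambda policy: welfare(market_estimates[policy], weights))


# Bet beliefs: hypothetical conditional market estimates for each policy.
market_estimates = {
    "status_quo": {"gdp_growth_pct": 2.0, "life_expectancy_years": 78.0},
    "new_policy": {"gdp_growth_pct": 2.5, "life_expectancy_years": 77.8},
}

# Vote values: two hypothetical weightings a vote might produce.
print(choose_policy(market_estimates, {"gdp_growth_pct": 1.0, "life_expectancy_years": 0.5}))  # new_policy
print(choose_policy(market_estimates, {"gdp_growth_pct": 1.0, "life_expectancy_years": 5.0}))  # status_quo
```

The market estimates are identical in both calls; only the voted weights differ, which is the point of keeping values and beliefs in separate channels.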

Presentation: Mark Miller: Paretotropism

- “Visualizing Voluntary Cooperation”

- When I think about the dynamic of cooperation, there are several diagrams in my head: I’ll share them here so we have a shared visual language to discuss them.

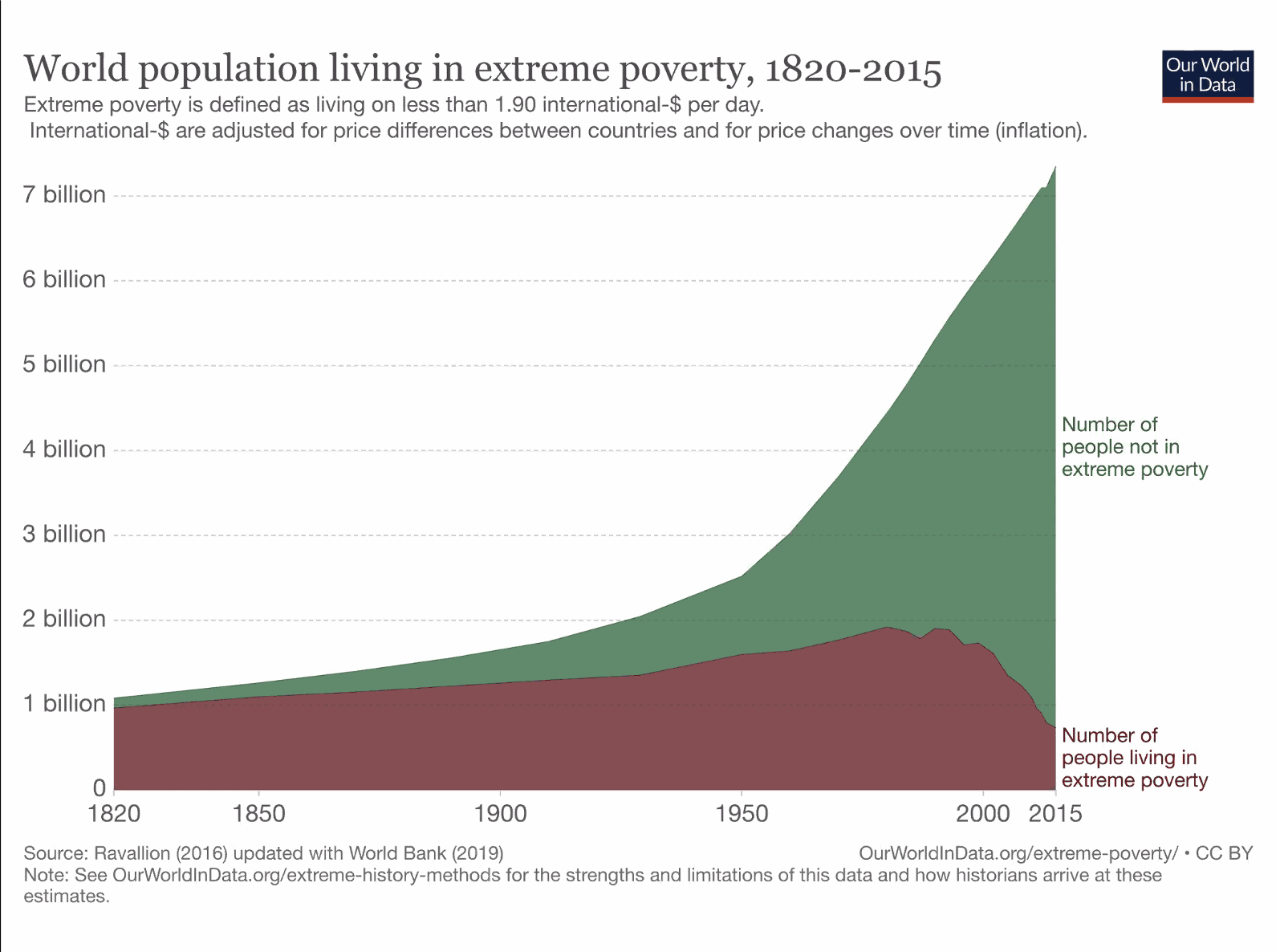

- But first: looking at the data, we’ve been very effective at getting people out of extreme poverty.

- As Steven Pinker says: we’ve been doing something right, and it would be good to know what it is. The dynamics of this global civilization emerge from the choices we’re all making, so let’s look at those choices to see what we find.

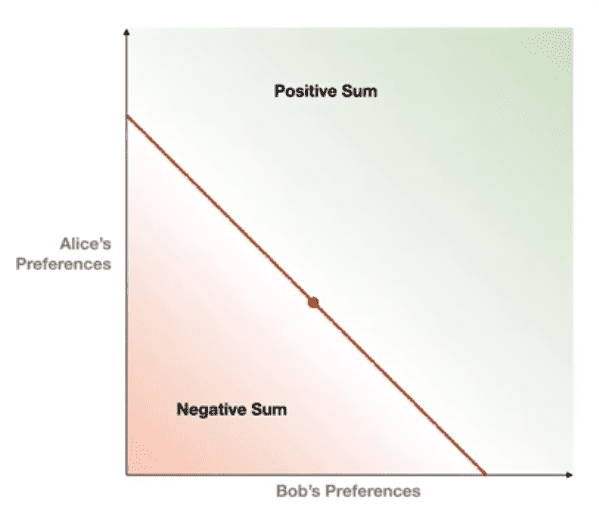

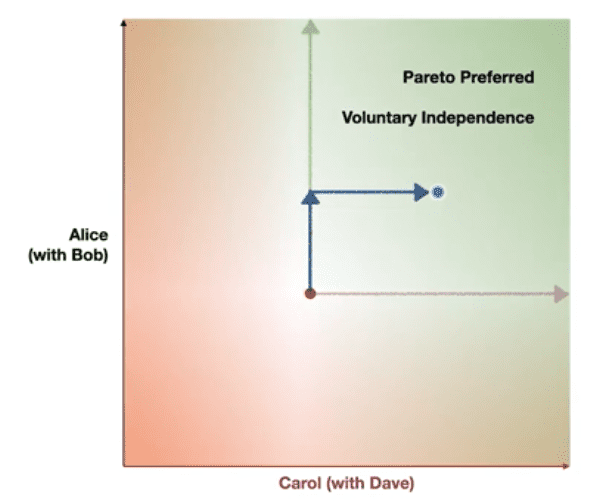

- In the 7-billion-dimensional space of everyone’s preferences, any particular decision deals with a small slice, and any particular interaction is bounded to a certain set of participants. So for any given interaction, a diagram such as this can be drawn:

- In the chart, the red dot is the state of the world. Y-values are “utility to Alice” and the x-values are “utility to Bob”. So Alice wants the dot to move up, and Bob wants it to move right.

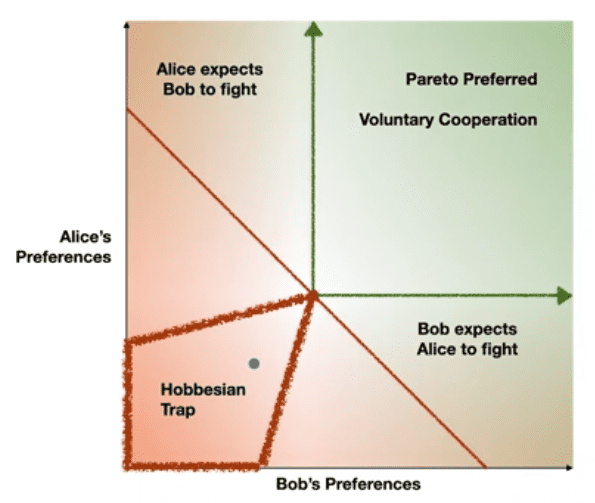

- You can separate the positive-sum area into three regions:

- In the “Pareto Preferred” region, where voluntary cooperation resides, someone is better off and nobody is worse off, so there’s no reason for anyone to not go along with the plan.

- In the upper left and lower right, one person is better off by MORE than the other is worse off, so each might expect the other to fight for a different outcome.

- This mutual expectation of a fight creates the Hobbesian trap: both sides expect a fight and prepare for it preemptively, creating First Strike Instability (see: the Cold War).
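
The regions described above can be expressed as a small classifier over a proposed move away from the red dot. This Python sketch assumes utility changes are plain numbers (an assumption the talk later questions); the function and region labels are hypothetical names chosen for illustration.

```python
def classify_move(d_bob: float, d_alice: float) -> str:
    """Classify a move by its effect on each party.

    x-axis: change in Bob's utility; y-axis: change in Alice's utility.
    """
    if d_bob == 0 and d_alice == 0:
        return "status_quo"
    if d_bob >= 0 and d_alice >= 0:
        # Someone gains and nobody loses: voluntary cooperation is possible.
        return "pareto_preferred"
    if d_bob + d_alice > 0:
        # Positive-sum, but one side loses and may fight the outcome.
        return "contested_alice_gains" if d_alice > 0 else "contested_bob_gains"
    return "not_positive_sum"


for move in [(2, 1), (-1, 3), (3, -1), (-2, 1)]:
    print(move, classify_move(*move))
```

Only the first branch describes moves that both parties would accept without a fight; the contested branches are where the Hobbesian trap can arise.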

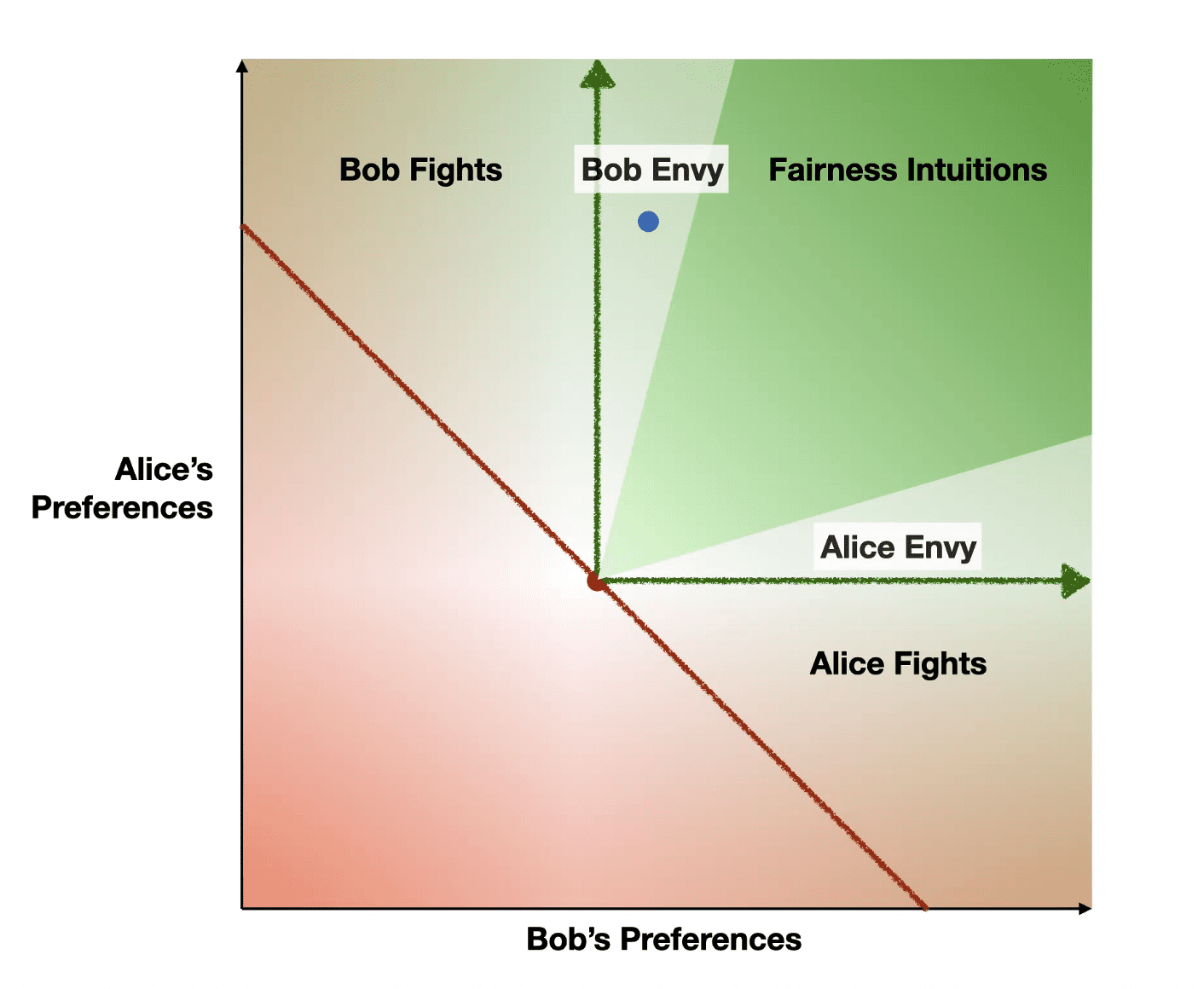

- The Pareto Preferred region itself can be separated into three regions:

- In the top left and bottom right, one person is making out quite well while the other gets crumbs. From the literature we predict that even though the smaller winner is technically winning, they will fight that outcome out of a desire for fairness.

- We are entering a world where we coexist with AI descendants that are totally different from us. Utility was already a questionable metric, and once AIs are on the scene it will make even less sense.

- A better metric may be “Revealed Preferences”: as long as the agents in the system are in any way goal-seeking, then their actions should be informed by these goals and will reveal a ranking of preferences.

- Reorienting the chart around revealed preferences renders the concepts of “negative-sum” and envy less coherent, but the green cone central to the upper right will still represent the sweet spot for what Alice and Bob can accomplish by making deals.

- Alice and Bob can reason about each other’s preference rankings and hold out for good deals.

- Keep in mind: the vast majority of folks interacting in the world are totally ignorant of what almost everyone else is doing. They are all independently working to improve their standing, and thereby you end up with a general march into more preferred territory.

- How can Alice and Bob move into the preferred area?

- Nash Equilibrium Trap: individual players can’t incrementally move toward the world we all prefer, because along the way there are intermediate states where one or more players are already better off and lose the incentive to keep cooperating.
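
Since revealed preferences give only a ranking, a deal can still be checked against the status quo without any utility numbers. The minimal Python sketch below assumes each agent’s observed choices arrive as hypothetical (chosen, rejected) pairs, infers preferences transitively, and conservatively accepts a deal only when every party has revealed that it prefers the deal to the status quo.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Choice = Tuple[str, str]  # (chosen option, rejected option)


def revealed_better(choices: Iterable[Choice]) -> Dict[str, Set[str]]:
    """Map each option to every option it was chosen over, directly or transitively."""
    direct: Dict[str, Set[str]] = defaultdict(set)
    for chosen, rejected in choices:
        direct[chosen].add(rejected)
    closure: Dict[str, Set[str]] = {}
    for start in list(direct):
        seen: Set[str] = set()
        stack = list(direct[start])
        while stack:  # depth-first walk; `seen` also guards against cycles
            option = stack.pop()
            if option not in seen:
                seen.add(option)
                stack.extend(direct.get(option, ()))
        closure[start] = seen
    return closure


def everyone_prefers(deal: str, status_quo: str, choices_by_agent: Dict[str, List[Choice]]) -> bool:
    """True if every agent has revealed that it prefers `deal` to `status_quo`."""
    return all(
        status_quo in revealed_better(choices).get(deal, set())
        for choices in choices_by_agent.values()
    )


observed = {
    "alice": [("deal_a", "status_quo"), ("deal_b", "deal_a")],
    "bob": [("deal_a", "status_quo"), ("status_quo", "deal_b")],
}
print(everyone_prefers("deal_a", "status_quo", observed))  # True
print(everyone_prefers("deal_b", "status_quo", observed))  # False
```

Alice would love `deal_b`, but Bob has revealed that he prefers the status quo to it, so only `deal_a` passes.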

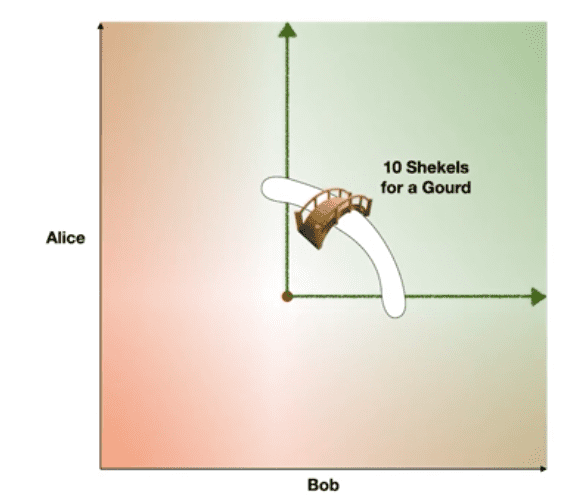

- How can we build bridges across the collective action problem into Pareto Preferred space?

- With technologies like escrow, reputation, contracts, and the rule of law, Alice and Bob can arrange to cross the bridge together into the world in which they’re both better off.

- Blockchain technologies have brought in an entirely new toolset to work on these problems.

- Tropism is a tendency to grow in a certain direction: plants have phototropism, in that they grow toward the light. Likewise, through our various tendencies and technologies for cooperation, we as a civilization seem to have a sort of paretotropism.

- How can we shape tropisms to guide humanity into cool futures?
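
Escrow is the simplest of these bridges to sketch. In a sequential exchange, the intermediate state after Alice pays but before Bob delivers is exactly the trap described above: Bob is already better off and has no incentive to finish. A hypothetical escrow holds both deposits and either swaps them together or refunds them, so that intermediate state is never exposed. This Python sketch is illustrative only and is not modeled on any particular escrow or smart-contract API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Escrow:
    """Hypothetical two-party escrow: releases only if both sides deposit."""

    expected: Dict[str, str]  # party -> item that party must deposit
    deposits: Dict[str, str] = field(default_factory=dict)

    def deposit(self, party: str, item: str) -> None:
        if self.expected.get(party) != item:
            raise ValueError(f"{party} must deposit {self.expected.get(party)!r}")
        self.deposits[party] = item

    def settle(self) -> Dict[str, Optional[str]]:
        """Return what each party walks away with."""
        parties = list(self.expected)
        if len(parties) != 2:
            raise ValueError("this sketch handles exactly two parties")
        a, b = parties
        if set(self.deposits) == {a, b}:
            # Both sides committed: swap atomically.
            return {a: self.deposits[b], b: self.deposits[a]}
        # Someone failed to deposit: refund whatever was deposited.
        return {p: self.deposits.get(p) for p in parties}


one_sided = Escrow({"alice": "payment", "bob": "goods"})
one_sided.deposit("alice", "payment")
print(one_sided.settle())  # {'alice': 'payment', 'bob': None}

both = Escrow({"alice": "payment", "bob": "goods"})
both.deposit("alice", "payment")
both.deposit("bob", "goods")
print(both.settle())  # {'alice': 'goods', 'bob': 'payment'}
```

Either both parties end up better off or both end up where they started, so neither has to trust the other through the intermediate state.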

Q&A

I have a question about futarchy: I’ve read the futarchy mini-paper/webpage, admittedly not the longer paper… separating voting on values, betting on beliefs mostly makes sense up until the point where I try to understand what we would be betting on and how it would be evaluated. Prediction markets I’ve participated in have been very low-resolution, but most implemented policies seem broad, and evaluating their success/failure seems to require higher resolution. Could we have an example futarchy workflow for a presumed example policy, from voting to betting to resolving bets?

Question for Mark Miller: Who coined the term “paretotopia”? Eric Drexler has been using it since I first met him ~3 years ago, and I just assumed it was his term.

- I no longer use “paretotopia” because it implies a state that can be reached, instead I use paretotropism, a dynamic, a motion.

If everyone is going to be way better off, will folks have the same fairness intuitions as they would in unequal futures?

- Yes: if we are in a place with much to gain by all, there’s more focus on cooperating than sniping, but there are a lot of other factors in the mix.

If we assume that Alice and Bob have unequal bargaining power, how does that affect your diagrams? Do we need more complex charts?

- Seeing those diagrams would be interesting.

I’m confused by your talk: what’s your claim, Mark?

- We should be looking for ways to amplify and accelerate cooperative ability. New tech can unlock this.

Whether or not futarchy works can be tested. Whether I think it can work matters mainly because it motivates the creation of tests. The confusion comes in here: how can we tell when a policy has achieved its goals?

- The organizations with the most solid agreement on their objective are for-profit companies. There’s a stock price that represents the future value of the organization.

- Example: Should we replace the CEO of Firm X?

- The stock price fluctuates based on willingness to purchase at different prices.

- Two scenarios: we keep the CEO, or we dump her. One market trades on the “Keep CEO” assumption and will be called off if she’s dumped. One market trades on the “Dump CEO” assumption and will be called off if she’s kept.

- Whichever conditional market prices the company higher shows which action should be taken. This isn’t perfect, but it might improve on the status quo.
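
The CEO example can be sketched as two conditional markets plus a settlement rule. This Python sketch is a simplification under stated assumptions: each trade is a hypothetical purchase (or short sale) of shares at a price conditional on one decision, the decision goes to whichever conditional market prices the firm higher, and trades in the called-off market are refunded. Names such as `Trade`, `decide`, and `settle` are hypothetical and do not come from Hanson’s papers.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Trade:
    trader: str
    market: str    # which conditional market: "keep_ceo" or "dump_ceo"
    price: float   # price paid per share, conditional on that decision
    shares: float  # positive = bought; negative = sold short


def decide(prices: Dict[str, float]) -> str:
    """Take the action whose conditional market values the firm highest."""
    return max(prices, key=lambda market: prices[market])


def settle(trades: List[Trade], chosen: str, realized_price: float) -> Dict[str, float]:
    """Profit per trader; trades in the called-off market are refunded (0.0)."""
    pnl: Dict[str, float] = {}
    for t in trades:
        gain = (realized_price - t.price) * t.shares if t.market == chosen else 0.0
        pnl[t.trader] = pnl.get(t.trader, 0.0) + gain
    return pnl


prices = {"keep_ceo": 48.0, "dump_ceo": 52.0}
chosen = decide(prices)
print(chosen)  # dump_ceo
trades = [Trade("ann", "dump_ceo", 50.0, 10), Trade("raj", "keep_ceo", 47.0, 5)]
print(settle(trades, chosen, realized_price=55.0))  # {'ann': 50.0, 'raj': 0.0}
```

Because Raj traded in the market whose condition never happened, his trade is simply unwound; only the market matching the actual decision is ever scored against the realized stock price.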

Personal commitment and human honor and integrity are ancient coordination technologies that should not be ignored.

Will AIs have envy?

Irrational people exist… so how does that jibe with the Pareto case?

- How do others’ states affect my states? The diagram assumes that actors are independent, so it deliberately does not account for negative-sum thinking.

Have you done any research on the merging of players? For example, DAOs can coordinate with other DAOs. Have you looked hard at “Alice and Bob negotiating with Mary and Dave”?

- This is pretty much the same problem with more variables; apply combinatorial reasoning.

My utility function is complicated and contains contradictions. And utility functions are coupled in both positive and negative ways: I’ll take a negative result if it makes my son better off. How does this weigh in?

- These graphs are distilled in an attempt to get at some constrained truths.

Can I sniff the behavioral exhaust to determine where I should drive next?

Links:

- Robin Hanson on Value Drift: https://www.overcomingbias.com/2018/02/on-value-drift.html

- Robin Hanson on Futarchy: https://mason.gmu.edu/~rhanson/futarchy.html

- Poverty decline data: https://ourworldindata.org/the-global-decline-of-extreme-poverty-was-it-only-china

Seminar notes by James Risberg.