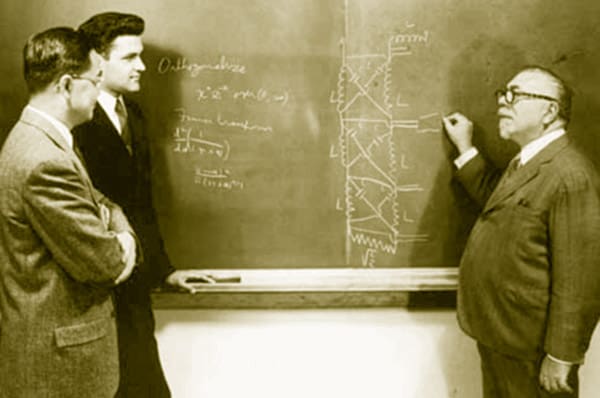

AI researchers in the ’80s ran into a problem: the more their systems knew, the slower they ran. People, by contrast, tend to get faster (and better in other ways) at whatever they’re doing the more they learn. The solution, of course, is: Duh. The brain doesn’t work like a von Neumann…

Associative memories