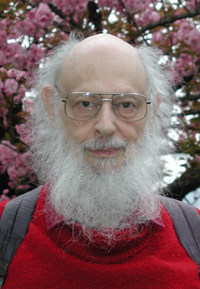

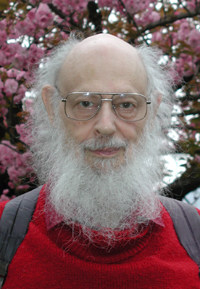

Ray Solomonoff, inventor of algorithmic probability and one of the founding fathers of AI, died December 7 after a brief illness.

I met Ray at the AI@50 conference at Dartmouth, given to celebrate the first AI conference and honor the five then surviving participants. He was very friendly, still sharp and insightful, and we had an email correspondence afterwards.

Five of the attendees of the 1956 Dartmouth Summer Research Project on Artificial Intelligence reunited at the July 2006 AI@50 conference. From left: Trenchard More, John McCarthy, Marvin Minsky, Oliver Selfridge, and Ray Solomonoff.

Here is what I wrote about Ray and algorithmic probability in Beyond AI:

Predicting Machines

One of the participants in the 1956 workshop, Ray Solomonoff, was interested in the problem of prediction: given a description of a possible world, say what happens next in it.

Clearly if you have a machine that can do this, you are well on your way to an AI. For each action in its repertoire, the AI uses the predictor to find out what would happen if it did that; then it needs only some way to choose which outcome is most desirable.

Solomonoff describes an incident at the meeting:

One day McCarthy gave a talk on “Well defined mathematical problems.” His thesis was that all mathematical problems could be formulated as problems of inverting Turing machines. [In other words, given a Turing machine and its output, find the input.]

McCarthy gave many examples to show how problems could be put into this form.

I asked him about the induction problem: “Suppose you are given a long sequence of symbols describing events in the real world. How can you extrapolate that sequence?”

Next day he said, “Suppose we were wandering about in an old house, and we suddenly opened a door to a room and in that room was a computer that was printing out your sequence. Eventually it came to the end of the sequence and was about to print the next symbol. Wouldn’t you bet that it would be correct?”

Suppose we are given a sequence to extrapolate. It would be nice if we could visit a very big house, which held all possible computers running every possible program, each printing out a sequence. Sooner or later we would find one that was printing our sequence.

This is where the nature of Turing machines comes in handy. Any Turing machine can be simulated by the same, universal, machine. Each machine can be described by a string of bits, its encoding for use in the universal machine. But a string of bits is also a number–so we can simply count through all possible Turing machines. Simulate each one, see if it matches the sequence you want to extrapolate, and take the first one to do so. This machine is the best theory of the information content in your sequence. (This concept was later rediscovered by the Russian mathematician Kolmogorov, and is commonly called Kolmogorov complexity today. But it Solomonoff did it first, with an inspiration from John McCarthy.)

This doesn’t work as it stands–Turing machines aren’t that well behaved. One might print out the first few symbols of your sequence, and then run and run without printing anything for a long long time. Is it stuck in an endless loop or will it eventually print the next correct symbol? But the notion gave Solomonoff enough traction on the problem to make some major strides in algorithmic information theory. These are now, 50 years later, becoming more and more clearly relevant to the problem of prediction in AI.

We can measure theories by their information entropy, for example. First, the theory itself can be expressed in bits, and its length measured (it can be a program that makes predictions, for example). Then, the value of its predictions can be measured in terms of the entropy of the states it predicts. We minimize both; minimizing the size of the theory is just Ockham’s Razor, and minimizing the prediction entropy is a measure of how accurate it is.

(Latter-day researchers taking this approach include Marcus Hutter, Universal Artificial Intelligence, and Eric Baum, What is Thought?)

I had been looking forward to meeting Ray again at AGI10. The world is a poorer place for his loss.