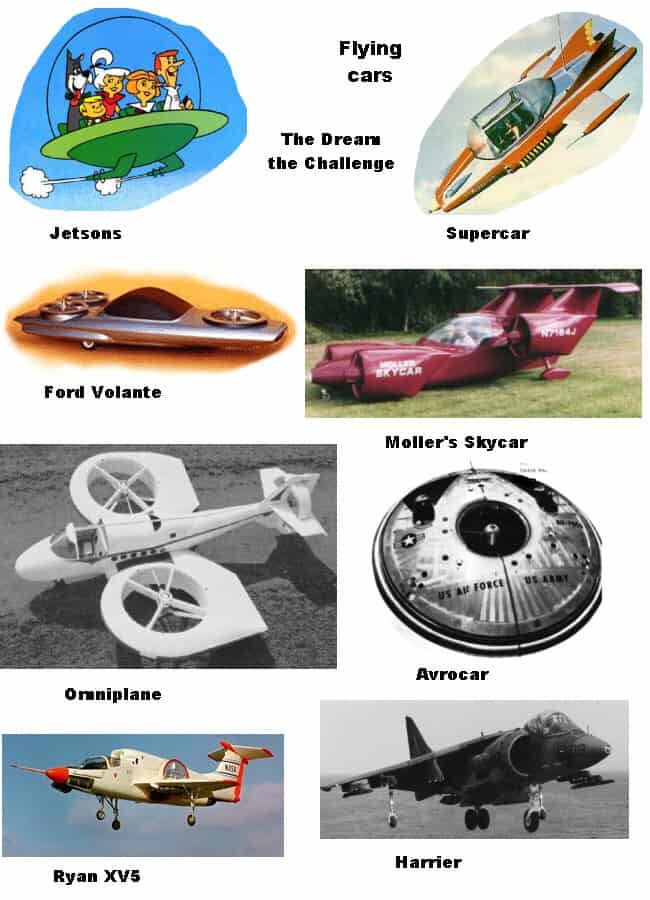

Previous in series: Why would I want a flying car?

There have been many reasons urged against the concept of flying cars; let’s take stock of them here:

- They are impractical (and thus time spent on the concept is wasted)
- They would be noisy or unsightly
- They would be dangerous, to the occupants or to…

Why would I not want a flying car?